Artificial intelligence | 22 Mar 2024 | 20 min

Diffusion Model: The Brain Behind Multimodal LLMs

Hey AI,

Create an underwater scene with glowing animals and plants, surrounded by old shipwrecks, fish, and coral.

🤖 (Diffusion Models): For sure! Here you go:

Fig: AI-Generated Image

Such instantaneous magic is now a reality, all thanks to various kinds of Generative AI models!

In 2024, organizations are increasingly harnessing the capabilities of GenAI models such as Generative Adversarial Networks (GANs), Transformer Models, and Variational Autoencoders to elevate their productivity across multiple use cases while streamlining resources, time, and expenses.

However, the image you saw above was produced by a Diffusion Model. What’s that?

Answer: In this blog, I’ll take you into the depths of the Diffusion Model, its benefits, and its intricacies. You will also gain insights into various Diffusion Models, empowering you to apply them to real-world challenges.

So, let’s get started!

The diffusion model is a technique at the forefront of the latest advancements in Multimodal Large Language Models (MLLMs). It is used to produce lifelike images from random noise, mimicking real-world visuals with remarkable accuracy.

Generating high-quality images from text used to be hard, needing deep understanding and skill. But diffusion models have changed this, making the process simpler with minimal text input.

Fact: Did you know? GANs use a generator and a discriminator that compete with each other to create images, whereas a diffusion model starts from random noise and removes it step by step to achieve similar results.

Using diffusion models, you can generate images in two ways:

Let me clarify this by giving you a better understanding of its potential.

Diffusion models have numerous advantages over traditional generative models like GANs and VAEs. These benefits arise mainly from the diffusion model's unique approach to data generation and its reverse diffusion process. Here are some of them:

1. Good Image Quality: Diffusion models create highly detailed and realistic images by learning the patterns in the data and refining the output step by step through reverse diffusion. This typically results in fewer artifacts and more coherent structures than conventional GANs.

2. Stable Training: They are more reliable than GANs to train because they use a stable, likelihood-based objective. This avoids issues like mode collapse, which GANs face because their generator and discriminator must be kept carefully in balance during adversarial training.

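3. Handling Data Privacy Issues: They can help with data privacy by generating synthetic data that can be used in place of sensitive original data. This does not guarantee privacy on its own, though. Diffusion models can occasionally reproduce training images, so techniques such as differentially private training are used when strong guarantees are needed.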

4. Handling Missing Data: They handle missing data well by filling gaps through reverse diffusion, a technique known as inpainting. This allows the model to produce complete output images even when parts of the input images are missing.

5. Robustness to Overfitting: They tend to be less prone to overfitting than GANs. Likelihood-based training and reverse diffusion discourage them from memorizing specific examples. As a result, they generate a wider range of realistic images.

6. Interpretable Latent Space: They offer a more interpretable latent space than GANs. The noisy intermediate images act as latent variables that help capture variation and sample diversity. Because reverse diffusion gradually maps simple noise to the data distribution, it supports meaningful representations, useful insights, and precise control over generated images.

7. Scalability to High-Dimensional Data: They can easily handle high-resolution images as they use a step-by-step diffusion process, which helps them to efficiently generate complex, detailed images without struggling with the large size of the data.
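One common way diffusion models cope with large images in practice is latent diffusion, the approach Stable Diffusion takes. Instead of denoising raw pixels, the model denoises a compressed representation produced by an autoencoder. The back-of-the-envelope calculation below is a minimal sketch. It assumes a downsampling factor of 8 per side and 4 latent channels, which matches Stable Diffusion-style models. Other models use different factors, and the helper names are illustrative only.

```python
def latent_shape(height: int, width: int, downsample: int = 8, latent_channels: int = 4):
    """Shape of the latent an autoencoder with these (assumed) factors would produce."""
    return (latent_channels, height // downsample, width // downsample)


def num_values(shape):
    """Total number of scalar values in a tensor of the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


pixel_shape = (3, 1024, 1024)        # an RGB image at 1024 x 1024 pixels
z_shape = latent_shape(1024, 1024)   # (4, 128, 128) under the assumed factors
ratio = num_values(pixel_shape) / num_values(z_shape)
print(z_shape, ratio)  # (4, 128, 128) 48.0
```

Under these assumptions, the denoising network works on 48 times fewer values per image. This is a large part of why high-resolution generation stays tractable.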

Curious about how all of this happens?

Well, next, you’ll dive into the workings of diffusion models and how they generate high-quality images.

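Here’s the core procedure that diffusion models follow. A forward diffusion process gradually adds Gaussian noise to a training image until only noise remains. A reverse diffusion process then uses a neural network to remove that noise step by step, so new images can be generated starting from pure random noise.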

Now, let’s understand each step without diving into the mathematical details:

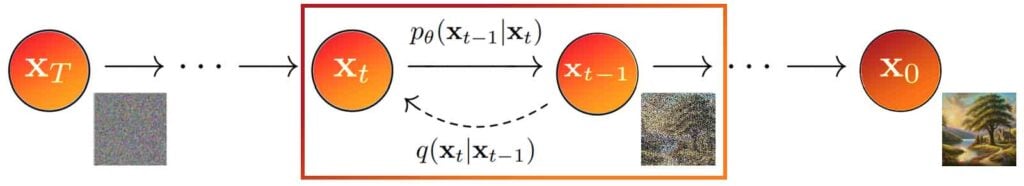

Fig: Forward and Backward/Reverse Diffusion process
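The reverse process in the figure can be sketched as a loop that repeatedly applies a denoising update. Below is a minimal, pure-Python sketch of the DDPM sampling update, with the added noise variance set to beta_t and an assumed linear schedule of 50 steps. `predict_noise` is a hypothetical stand-in for a trained network such as a UNet. Here it just returns zeros so the loop runs, which means the output is not a meaningful image.

```python
import math
import random


def linear_betas(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Linearly increasing noise variances, one per step (assumed values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def predict_noise(x, t):
    """Hypothetical placeholder for a trained noise-prediction network."""
    return [0.0 for _ in x]


def sample(size: int, num_steps: int = 50, seed: int = 0):
    rng = random.Random(seed)
    betas = linear_betas(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)

    # Start from pure Gaussian noise x_T.
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Mean of p(x_{t-1} | x_t): subtract the predicted noise, then rescale.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add fresh noise with variance beta_t, except at the final step.
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


result = sample(size=4)
print(len(result))  # 4
```

Each pass through the loop is one call to the network. This is why the number of steps directly controls how long sampling takes.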

Another advantage of the iterative nature of this diffusion process is that we can conduct supervised training at each step. In diffusion models, the most popular architecture is a UNet (with attention), trained in a supervised manner with a Mean Squared Error (MSE) loss to predict the noise added at each step.
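To make the supervised objective concrete, consider a single DDPM-style training step. The clean image is noised in one shot to a chosen timestep, and the network is trained to predict the exact noise that was added, with MSE as the loss. The pure-Python sketch below assumes the image is a flat list of pixel values. In real training, the timestep t and its cumulative noise level `alpha_bar_t` would be sampled from the scheduler, but here they are passed in directly. `zero_model` is a hypothetical, untrained placeholder for the UNet.

```python
import math
import random


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def training_loss(x0, alpha_bar_t, predict_noise, t, rng):
    """DDPM-style loss for one image at timestep t.

    alpha_bar_t is the cumulative product of (1 - beta) up to step t,
    as provided by the noise scheduler.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    # Jump directly to the noised image x_t (closed-form forward process).
    x_t = [math.sqrt(alpha_bar_t) * x + math.sqrt(1.0 - alpha_bar_t) * n
           for x, n in zip(x0, noise)]
    predicted = predict_noise(x_t, t)
    # Supervised target: the exact noise that was added.
    return mse(predicted, noise)


def zero_model(x_t, t):
    """Hypothetical untrained 'network' that always predicts zero noise."""
    return [0.0] * len(x_t)


image = [0.5, -0.2, 0.1, 0.9]  # a toy "image" with four pixel values
print(training_loss(image, alpha_bar_t=0.5, predict_noise=zero_model, t=10, rng=random.Random(0)))
```

A network that recovered the noise perfectly would drive this loss to zero. Training pushes the UNet in that direction across many images and timesteps.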

At each step, the amount of noise added to the image is regulated by a variance scheduler, or simply a scheduler. The scheduler determines how much noise to add at each step. This ensures that the image at the end of the forward process, denoted x_T, follows an isotropic Gaussian distribution (in other words, it is pure noise).
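For readers who want the formulas behind the scheduler, here is the standard DDPM formulation. β_t is the noise variance the scheduler assigns to step t. Adding noise step by step turns out to be equivalent to sampling x_t directly from the clean image x_0. The schedule is chosen so that ᾱ_T is close to 0, which makes x_T approximately an isotropic Gaussian.

```latex
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\right)

\alpha_t = 1 - \beta_t, \qquad \bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s

q(x_t \mid x_0) = \mathcal{N}\!\left(x_t;\ \sqrt{\bar{\alpha}_t}\,x_0,\ (1-\bar{\alpha}_t)\,\mathbf{I}\right)
\quad\Longrightarrow\quad
x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})
```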

A common choice is a linear scheduler, meaning that the noise variance added at each step increases linearly. In the original DDPM setup, the number of steps, denoted T, was set to T = 1000. As a result, generating a single image required 1000 sequential passes through the model, which made sampling slow. More recently, new sampling techniques and schedulers have enabled researchers to generate artistic images in as few as 4 steps.
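The effect of a linear scheduler is easy to check numerically. The sketch below assumes the values from the original DDPM paper, with β rising linearly from 0.0001 to 0.02 over T = 1000 steps. It then computes ᾱ_t, the fraction of the original image's signal variance that survives after t steps.

```python
def linear_schedule(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Return (betas, alpha_bars) for a linear variance schedule."""
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta        # alpha_t = 1 - beta_t
        alpha_bars.append(running)   # alpha_bar_t = product of alphas so far
    return betas, alpha_bars


betas, alpha_bars = linear_schedule()
for t in (1, 250, 500, 1000):
    # How much of the original image's signal remains after t steps
    print(t, alpha_bars[t - 1])
```

With these values, ᾱ_t drops to roughly 0.5 by step 250 and below 0.1 by step 500. It reaches about 4e-5 at step 1000, so x_T is essentially pure noise.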

Try this code to generate an image from a text prompt with SSD-1B, Segmind's distilled version of the Stable Diffusion XL model. It needs the diffusers and torch libraries and a CUDA-capable GPU:

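```python
import torch
from diffusers import StableDiffusionXLPipeline

# Load SSD-1B (a distilled SDXL) with half-precision weights
pipe = StableDiffusionXLPipeline.from_pretrained(
    "segmind/SSD-1B", torch_dtype=torch.float16, use_safetensors=True, variant="fp16"
)
pipe.to("cuda")  # requires a CUDA-capable GPU

prompt = ("A lonely woman standing on a deserted beach at sunrise, silhouetted against "
          "the orange sky, waves crashing at her feet")  # Your prompt here
neg_prompt = "chaotic, unclear, poor quality"  # Negative prompt here

image = pipe(prompt=prompt, negative_prompt=neg_prompt).images[0]
image.save("beach.png")  # the result is a PIL image; the filename is arbitrary
```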

Got the gist and flavor of its functionality? Great!

Now, let's look at its applications across various sectors. 😀

Diffusion models can be used in multimodal large language models in various ways, directly or indirectly. Some of their applications across industries are listed below:

1. Text to Video: By expanding on the idea behind text-to-image conversion, we can apply diffusion models to produce videos based on narratives, songs, poems, and other textual content.

Industry use case: Software companies can use diffusion models to create tutorial videos for their products, improving user onboarding and satisfaction.

2. Text to 3D: By applying concepts like those used in text-to-image conversion, we can create three-dimensional representations based on textual descriptions.

Industry Use Case: In the banking sector, diffusion techniques can be applied to generate 3D visualizations of financial data and trends, helping analysts make informed decisions.

3. Text to Motion: This is a newer approach that uses diffusion models to create realistic human movements for computer animation. It aims to capture the nuances of human motion accurately, which is challenging because human movement is so complex. When trained well, these models can produce high-quality results and have been reported to outperform other methods for translating text or actions into movement.

Industry Use Case: Healthcare organizations can use this for rehabilitation programs, where realistic movements are essential for faster patient recovery.

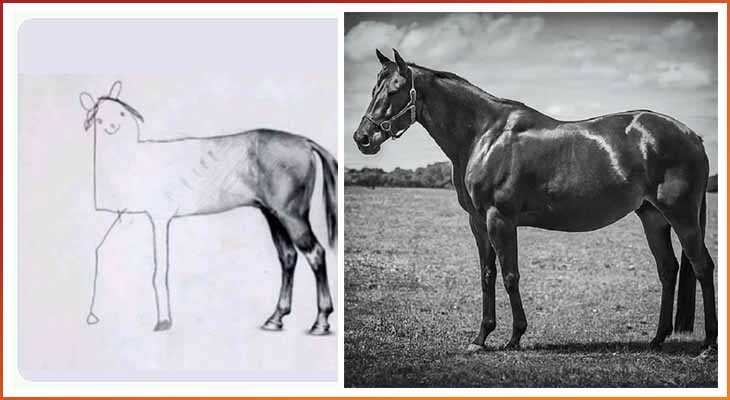

4. Image 2 Image: This is a method to change images based on text prompts, creating new images with different styles or appearances. It converts an existing image into a new one by applying specified changes described in the text. Here’s an example:

Fig: Finished the unfinished image

Industry use case: Quality control departments in the manufacturing sector can use this to enhance images of defective parts for better analysis and identification.
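A common way to do image-to-image with diffusion models is the SDEdit approach. The input image is noised only part of the way, and then the reverse process runs from that intermediate point. The result keeps the overall layout of the original, while the text prompt steers the details. Libraries such as diffusers expose this through a `strength` parameter between 0 and 1. The sketch below is a simplified, hypothetical illustration of how such a value could map to a starting noise level on an assumed 1000-step linear schedule. It is not the exact code of any library, and `img2img_start` is an illustrative name.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for a linear beta schedule (assumed values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        running *= 1.0 - (beta_start + i * step)
        alpha_bars.append(running)
    return alpha_bars


def img2img_start(x0, strength, alpha_bars, rng):
    """Partially noise an input image as the starting point for image-to-image.

    Returns (steps_to_run, x_start). The reverse process would then denoise
    x_start for steps_to_run steps. strength=0 keeps the input unchanged;
    strength=1 noises it all the way, so little of the original survives.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_to_run = int(len(alpha_bars) * strength)
    if steps_to_run == 0:
        return 0, list(x0)  # nothing to denoise; keep the original image
    ab = alpha_bars[steps_to_run - 1]  # noise level of the starting step
    x_start = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0) for x in x0]
    return steps_to_run, x_start


schedule = linear_alpha_bars()
steps, noisy = img2img_start([0.1, 0.4, -0.3], strength=0.5, alpha_bars=schedule, rng=random.Random(0))
print(steps)  # 500
```

Lower strength values preserve more of the original image. Higher values give the prompt more freedom to change it.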

We’re nearing the conclusion of this blog, but before we wrap up, I encourage you to explore some diffusion models and leverage their capabilities to enhance your operations.

Here are some of the popular diffusion-based generation models, plus one leading multimodal model, that have gained attention in recent months:

1. Sora by OpenAI: This is set to revolutionize video production by generating videos from text input with exceptional precision. It operates on diffusion principles, gradually refining video from noise over multiple iterations. It can generate a single clip or extend videos to make them longer.

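2. DALL-E 3 by OpenAI: This enhances image generation from text and follows prompts more closely than its predecessor, DALL-E 2, largely because it was trained on highly descriptive synthetic image captions. The CLIP-based two-stage design, which preserves semantics and style while modifying non-essential details, was introduced with DALL-E 2.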

3. GLIDE by OpenAI: This is a text-conditional image synthesis model that produces high-quality images from textual prompts. It uses a 3.5-billion-parameter diffusion model and can also edit existing images through text-guided inpainting.

4. Imagen by Google: This is a text-to-image diffusion model that combines large, frozen, pretrained text encoders with diffusion models for image synthesis. It supports high classifier-free guidance weights through a technique called dynamic thresholding, which improves photorealism and text alignment.

5. Gemini Pro Vision by Google: This is a leading multimodal model that accepts text, images, and video as input and generates text. It can help with tasks like understanding and summarizing content from infographics, documents, and photos.

6. Stable Diffusion by StabilityAI: This uses diffusion models and latent space efficiency for image generation. It employs Latent Diffusion Models (LDMs) trained in a compressed latent space, offering state-of-the-art results with reduced computational demands.

7. Midjourney: This is an AI-driven text-to-image diffusion model known for its accurate and imaginative outputs. It allows extensive control over image generation, enabling adjustments via prompt parameters for creative manipulation.
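Several of the models above rely on classifier-free guidance. This includes Imagen, as well as Stable Diffusion when you pass a negative prompt as in the earlier code. At each denoising step, the network predicts the noise twice: once with the text condition, and once without it (or with the negative prompt in its place). The two predictions are then combined. The sketch below shows only that combination step, on plain lists. The default guidance scale of 7.5 is an assumed, commonly used value and is not taken from any of the models listed.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale=7.5):
    """Combine unconditional and conditional noise predictions.

    guided = eps_uncond + w * (eps_cond - eps_uncond)
    w = 1 reproduces the conditional prediction; w > 1 pushes the sample
    further toward the prompt (and away from a negative prompt, if one
    is used in place of the unconditional input).
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


print(classifier_free_guidance([0.0, 1.0], [0.5, 1.0], guidance_scale=2.0))  # [1.0, 1.0]
```

Higher guidance scales usually improve prompt alignment at the cost of some diversity. This is the trade-off that Imagen's dynamic thresholding helps manage.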

Try using these prompts to generate images using different diffusion models:

For deeper insights into how Generative AI can help you build robust products and scale your business, reach out to us at Nitor Infotech.

Until next time, happy prompting!
