Artificial intelligence | 28 Feb 2024 | 14 min

Understanding Variational Autoencoders

If you’ve been looking to read about a highly powerful generative tool in the machine learning realm, you have arrived at the right place.

Allow me to begin with a confession.

As I sat down to write this blog and started reading up on variational autoencoders (VAEs), one word used to describe them jumped out at me: ‘beautiful’.

I was slightly surprised at the choice of adjective, but I grew more and more convinced about it as I continued my search.

As we set off on our exploration of VAEs in today’s blog, let’s first understand what autoencoders are.

An autoencoder is an unsupervised ML algorithm whose purpose is to learn a lower-dimensional representation of its input. This idea is quite deep if you muse over it.

First, let’s understand what a representation is. It is how you choose to describe something, in a way that works well enough for your purposes.

An autoencoder:

Now, what is a VAE all about? Let’s take a look.

Variational autoencoders (VAEs) are generative models that offer a probabilistic way of describing an observation in latent space.

They are explicitly designed to capture the underlying probability distribution of a given dataset and to generate new samples from it.

When using generative models, you might simply wish to create a random, fresh output that looks like the training data, and you can do that with VAEs. But it’s more likely that you’d want to change, or explore variations on, data you already have. You’d want to do this in a specific, desired direction rather than randomly. This is where VAEs are particularly well suited.
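
To make "a desired, particular direction" a little more concrete, here is a minimal sketch of one common way this is done: latent-space arithmetic. It assumes you already have latent vectors from a trained VAE's encoder for examples with and without some attribute. The function names (`mean_vector`, `attribute_direction`, `shift`) and the toy numbers are illustrative assumptions, not part of any particular library.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length latent vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def attribute_direction(with_attr, without_attr):
    """Direction in latent space pointing from 'without' towards 'with'."""
    mu_with = mean_vector(with_attr)
    mu_without = mean_vector(without_attr)
    return [a - b for a, b in zip(mu_with, mu_without)]


def shift(z, direction, strength):
    """Move a latent vector along a direction by the given strength."""
    return [value + strength * d for value, d in zip(z, direction)]


# Toy latent vectors (hypothetical encoder outputs, 2 dimensions each).
smiling = [[1.0, 0.0], [3.0, 0.0]]
neutral = [[0.0, 0.0], [0.0, 2.0]]

direction = attribute_direction(smiling, neutral)
z_edited = shift([0.0, 0.0], direction, strength=0.5)
print(direction, z_edited)
```

Decoding `z_edited` with the VAE's decoder would then give a variation of the original input nudged towards the attribute. How well this works in practice depends on how the trained latent space is organized.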

As you can imagine, it’s not a plain vanilla concept. So how is it different from vanilla autoencoders, then? Read on…

| Vanilla autoencoder | Variational autoencoder |
|---|---|
| The model is trained by minimizing the difference between the original input and the reconstructed output. This basic format is referred to as the vanilla autoencoder. | A VAE learns a distribution, generally a normal one, over the vectors in the latent space. In other words, there are several ways for an image to be encoded into and decoded from the latent space. |
| Linearly interpolating between two encodings often fails to produce good generated samples, because discontinuities in the embedding space prevent smooth transitions. | The latent spaces of VAEs are continuous by design. This allows smooth random sampling and interpolation. |
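
The interpolation mentioned in the table can be sketched in a few lines. This is only an illustration: the function name `interpolate` and the two toy latent vectors are assumptions, and in a real setting each point on the path would be passed through a trained decoder to produce an output.

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced on the line from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # goes from 0.0 to 1.0
        path.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path


# Two hypothetical encodings in a 2-dimensional latent space.
z_a = [0.0, 1.0]
z_b = [2.0, -1.0]

for z in interpolate(z_a, z_b, steps=5):
    print(z)
```

With a continuous latent space, decoding each of these intermediate points should give outputs that change gradually from one input to the other. With a vanilla autoencoder, some of the intermediate points may land in regions the decoder never saw during training.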

Now that you know the difference between the two, let’s take a closer look at a VAE…

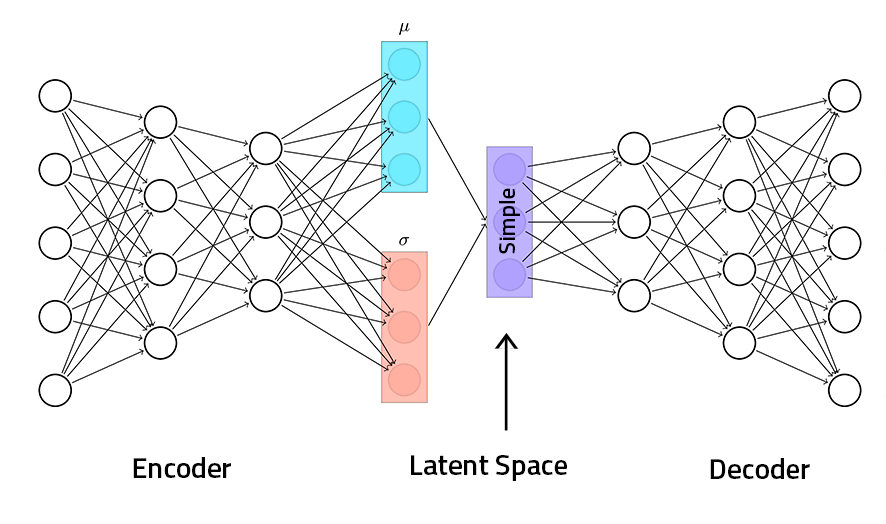

The VAE architecture comprises two parts — the encoder and decoder.

You use the encoder to map the input to a latent space. Meanwhile, the decoder maps points in the latent space back to the input space.

Since the decoder is used to reconstruct the input, it is typically built as a mirror image of the encoder. The encoder and decoder together form the VAE’s bowtie shape.

Fig: Architecture of the VAE
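
As a rough illustration of the two parts, here is a toy sketch in plain Python. It makes several assumptions that are not taken from this article: each part is a single linear layer, the weights are random rather than trained, the encoder outputs a mean and a log-variance per latent dimension, and sampling uses the standard reparameterization trick (z = mean + sigma * noise). The class name `TinyVAE` is hypothetical.

```python
import math
import random


def linear(weights, x):
    """Apply y = W x for a weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


class TinyVAE:
    """Toy VAE with one linear layer per part; weights are random, not trained."""

    def __init__(self, input_dim, latent_dim, seed=0):
        self.rng = random.Random(seed)
        # The encoder has two heads: one for the mean, one for the log-variance.
        self.w_mu = self._matrix(latent_dim, input_dim)
        self.w_logvar = self._matrix(latent_dim, input_dim)
        # The decoder mirrors the encoder: latent_dim in, input_dim out.
        self.w_dec = self._matrix(input_dim, latent_dim)

    def _matrix(self, rows, cols):
        return [[self.rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    def encode(self, x):
        """Map an input to the parameters of a normal distribution in latent space."""
        return linear(self.w_mu, x), linear(self.w_logvar, x)

    def sample(self, mu, logvar):
        """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
        return [m + math.exp(0.5 * lv) * self.rng.gauss(0.0, 1.0)
                for m, lv in zip(mu, logvar)]

    def decode(self, z):
        """Map a latent vector back to the input space."""
        return linear(self.w_dec, z)


vae = TinyVAE(input_dim=6, latent_dim=2)
x = [0.5, 0.1, 0.9, 0.3, 0.7, 0.2]
mu, logvar = vae.encode(x)
z = vae.sample(mu, logvar)
x_hat = vae.decode(z)
print(len(x), len(z), len(x_hat))  # 6 2 6
```

The narrow middle (2 values) between the wide input and output (6 values each) is what gives the architecture its bowtie shape. A real VAE would use deeper, non-linear networks and trained weights.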

Now that you are familiar with the architecture, let’s look at what happens during the training of a VAE.

In the world of VAEs, machines are trained to understand the essence of data and then unleash their imaginative powers. Here is what goes on behind the scenes.

Fig: Training of a VAE
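
For readers who want something concrete, here is a minimal sketch of the loss that a VAE is commonly trained to minimize. It is the standard textbook formulation rather than something spelled out in this article: a reconstruction term plus a KL-divergence term that pulls the encoder's distribution towards a standard normal. The squared-error reconstruction term and the function names are assumptions made for illustration.

```python
import math


def reconstruction_loss(x, x_hat):
    """Sum of squared differences between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def kl_to_standard_normal(mu, logvar):
    """KL divergence from N(mu, exp(logvar)) to N(0, 1), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_hat, mu, logvar):
    """Total loss: how badly we reconstruct, plus how far the latent code strays from N(0, 1)."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, logvar)


# Toy values: a 2-dimensional input and a 1-dimensional latent code.
x = [1.0, 2.0]
x_hat = [1.0, 0.0]
print(vae_loss(x, x_hat, mu=[1.0], logvar=[0.0]))
```

During training, this loss would be computed for each batch and minimized with gradient descent. The reconstruction term keeps the outputs faithful to the inputs, while the KL term is what keeps the latent space continuous enough to sample from.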

Now, let’s jump into how VAEs function in the real world!

Here are some significant applications of VAEs across industries:

Fig: Applications of VAEs

Time to look at what is on the horizon…

In a nutshell, VAEs have the capacity to transform generative AI as we know it. As they go through development and improvement, they will empower newer applications across various industries, supporting tons of creativity.

The path to mastering VAEs could be difficult, but the rewards are many. Thanks to VAEs, we have a dynamic tool to navigate the computational landscape smoothly.

Well, VAEs are beautiful, there’s no doubt.

Send us an email with your thoughts about the beauty of VAEs. Connect with us at Nitor Infotech to learn what we do as an Ascendion software company, and in the world of generative AI.
