Artificial intelligence | 19 Jul 2023 | 16 min

Training Large Language Models (LLMs): Techniques and Best Practices

In recent years, the field of natural language processing (NLP) has seen a remarkable transformation, thanks to the emergence of large language models. Rapid progress in deep learning and artificial intelligence drives these models.

They are engineered to grasp, analyse, and generate text in a way that closely emulates human communication.

Because they are trained on massive amounts of text data, Large Language Models (LLMs) acquire a wealth of linguistic knowledge that powers ML models in various applications.

In this blog, we are going to dive straight into training a Large Language Model. Let’s get set and go!

There are two stages of training: pre-training and fine-tuning.

The initial stage of training an LLM is pre-training. During pre-training, the model is exposed to a vast amount of unlabelled text data. The aim is to predict the next word in a sentence or fill in masked words within the sequence. This unsupervised learning task helps the model to learn the statistical patterns and structures of language.

Pre-training equips the LLM with a general understanding of grammar, syntax, and semantics. It allows the model to capture the relationships between words. It also helps it to build a strong foundation for language understanding.
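To make the next-word objective concrete, here is a minimal sketch in plain Python. The toy vocabulary, the token ids, and the two sets of "model outputs" (logits) are all made up for illustration; a real LLM would produce the logits with a neural network and a far larger vocabulary.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    highest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token t+1 from position t."""
    targets = token_ids[1:]  # the "label" at each position is simply the next token
    losses = []
    for logits, target in zip(logits_per_position, targets):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Hypothetical toy setup: vocabulary ["the", "cat", "sat"], sentence "the cat sat".
token_ids = [0, 1, 2]

# One row of logits per position that has a next token to predict.
confident_logits = [[0.0, 5.0, 0.0], [0.0, 0.0, 5.0]]  # favours the correct next token
uniform_logits = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]    # no preference at all

confident_loss = next_token_loss(confident_logits, token_ids)
uniform_loss = next_token_loss(uniform_logits, token_ids)
print(f"confident model loss: {confident_loss:.4f}")
print(f"uniform model loss:   {uniform_loss:.4f}")
```

The uniform model scores ln(3), the loss of guessing blindly over three tokens, while the confident model scores much lower. Pre-training amounts to nudging the model's parameters so that this loss falls across a huge text corpus, with no human-written labels needed.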

Fine-tuning is the process of refining a pre-trained language model by training it further on data specific to a particular task.

This specialized training lets the model adapt its parameters to the task. As a result, it picks up the intricacies, nuances, and domain-specific patterns needed to generate precise and context-aware outputs.

By exposing the model to targeted examples and relevant contexts, you enable it to produce more accurate and tailored responses.

Let’s now discuss some key aspects of training an LLM.

Scaling up the training of large language models (LLMs) requires careful consideration of hardware requirements and distribution strategies.

Here are some practical points to understand the challenges and opportunities involved:

Fig 1: Practical points to understand the challenges and opportunities involved in training LLMs

Choosing the right model architecture is crucial when training Large Language Models (LLMs) that cater to ML applications. Here’s a practical and concise breakdown to help you understand the choices and select the best model:

By considering complexity, attention mechanisms, residual connections, architectural variants, and task context, you can develop effective and efficient LLMs tailored to your needs.

Let’s turn to tokenization methods.

Tokenization is a crucial step in training Large Language Models (LLMs). Here, you break down textual data into smaller units called tokens. Consider the following techniques:
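As a rough illustration of what breaking text into tokens means, the sketch below shows word-level and character-level splitting, plus a single merge step in the style of byte-pair encoding (BPE). BPE is our choice of example here, and the helper names are hypothetical. Real tokenizers add pre-tokenization rules, byte handling, and many thousands of merges, none of which is shown.

```python
from collections import Counter

def word_tokens(text):
    """Word-level tokenization: split on whitespace."""
    return text.split()

def char_tokens(text):
    """Character-level tokenization: every character is a token."""
    return list(text)

def most_frequent_pair(tokens):
    """Find the adjacent token pair that occurs most often (first one wins ties)."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return max(pairs, key=pairs.get)

def merge_pair(tokens, pair):
    """Replace every occurrence of `pair` with one merged token (one BPE-style step)."""
    merged = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

text = "low lower lowest"
words = word_tokens(text)
chars = char_tokens(text)
pair = most_frequent_pair(chars)
merged = merge_pair(chars, pair)

print(words)
print(len(chars), "character tokens")
print("merged pair:", pair, "->", len(merged), "tokens")
```

Repeating the merge step many times grows a vocabulary of frequent subwords. That sits between the two extremes: shorter sequences than characters give, and fewer unknown words than a fixed word list gives.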

By optimizing the training process, tracking progress, applying regularization techniques, and diversifying training data, we can significantly enhance the training of Large Language Models (LLMs). These practices lead to:

They enable LLMs to understand and generate language more accurately and efficiently.

Fine-tuning is a powerful technique for optimizing the performance of Large Language Models (LLMs) on specific tasks. Here’s a practical breakdown of the process:

By understanding the fine-tuning process, we can optimize the performance of large language models for specific tasks.

Fine-tuning often demands high-end GPUs. Emerging techniques like Parameter-Efficient Fine-Tuning (PEFT) seek to minimize these resource requirements.

Let’s enjoy an overview of state-of-the-art techniques!

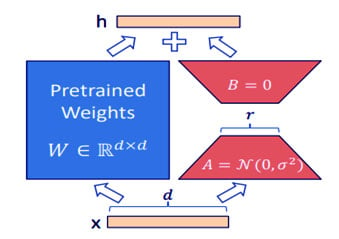

Low-Rank Adaptation (LoRA) is one of the main methods under Parameter Efficient Fine-tuning (PEFT). LoRA freezes the pre-trained model weights. It injects trainable rank decomposition matrices into each layer of the transformer architecture.

By making the middle (rank) dimension of these matrices small, we reduce the number of trainable parameters. This technique makes it feasible to fine-tune large models like GPT-3 with 175B parameters, reducing the number of trainable parameters by up to 10,000 times.

Source: https://arxiv.org

Fig 2: The architecture of Low-Rank Adaptation (LoRA)

The diagram illustrates the architecture of LoRA. The red block on the right side represents the LoRA weights that we want to train. By shrinking the middle dimension to a rank r, with “r << d”, we decrease the number of trainable elements.
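The idea of a frozen weight matrix plus a small trainable low-rank path can be sketched in plain Python. This is an illustration, not the implementation used by any library. The class name `LoRALinear` is hypothetical. The zero initialization of B, the small random initialization of A, and the alpha / r scaling are assumptions taken from the LoRA paper's description.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

class LoRALinear:
    """Frozen weight W0 plus a trainable low-rank update B @ A (illustrative only)."""

    def __init__(self, frozen_weight, r, alpha, seed=0):
        rng = random.Random(seed)
        d_out = len(frozen_weight)
        d_in = len(frozen_weight[0])
        self.W0 = frozen_weight  # pre-trained weights: never updated
        # A (r x d_in) starts small and random, B (d_out x r) starts at zero,
        # so the adapted layer initially behaves exactly like the frozen one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W0, x)                   # frozen path
        update = matvec(self.B, matvec(self.A, x))  # low-rank path through rank r
        return [b + self.scale * u for b, u in zip(base, update)]

d = 8
W0 = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # toy frozen weights
layer = LoRALinear(W0, r=2, alpha=4)
x = [float(i) for i in range(d)]

before = layer.forward(x)
layer.B[0][0] = 1.0  # stand-in for a gradient update to the trainable matrix B
after = layer.forward(x)
print(before[0], after[0])
```

Only A and B would receive gradient updates during fine-tuning. W0 stays untouched, which is why the same base model can be shared across many task-specific adapters.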

The total number of trainable elements can be calculated as “2 × L_LoRA × d_model × r”, where L_LoRA is the number of weight matrices LoRA is applied to, d_model is the model’s hidden dimension, and r is the rank. LoRA blocks are inserted into the attention layers of the Transformer architecture. The specific value of “r” may vary depending on the task, but experiments have shown good results using values between 2 and 4.
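Here is a quick worked example of that count, namely 2 × (number of adapted weight matrices) × d_model × r. The figures are assumptions based on the published GPT-3 175B configuration (96 layers, hidden size 12,288) and a setup in which LoRA with r = 4 is applied only to the query and value projections of each layer. Treat it as back-of-the-envelope arithmetic rather than an exact accounting.

```python
def lora_trainable_params(num_adapted_matrices, d_model, r):
    """Each adapted d_model x d_model matrix gets A (r x d_model) and B (d_model x r)."""
    return 2 * num_adapted_matrices * d_model * r

# Assumed configuration (not stated in this article): GPT-3 175B
gpt3_layers = 96
gpt3_d_model = 12288
total_params = 175_000_000_000

# Assumption: adapt two attention matrices (query and value) in every layer.
adapted_matrices = gpt3_layers * 2

trainable = lora_trainable_params(adapted_matrices, gpt3_d_model, r=4)
reduction = total_params / trainable

print(f"trainable LoRA parameters: {trainable:,}")
print(f"reduction factor: about {reduction:,.0f}x")
```

Under these assumptions, the trainable parameters come to roughly 18.9 million, which is in line with the "up to 10,000 times" reduction mentioned earlier.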

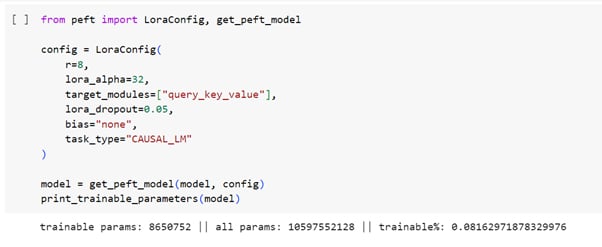

Fig 3: A code snippet that demonstrates the implementation of LoRA

The code snippet demonstrates the implementation of LoRA. We import LoraConfig and get_peft_model, define the configuration settings, and wrap the previously loaded LLM as a PEFT model.

The code showcases the vast difference between the actual parameters of the model and the trainable parameters. This model will undergo further fine-tuning on the data.

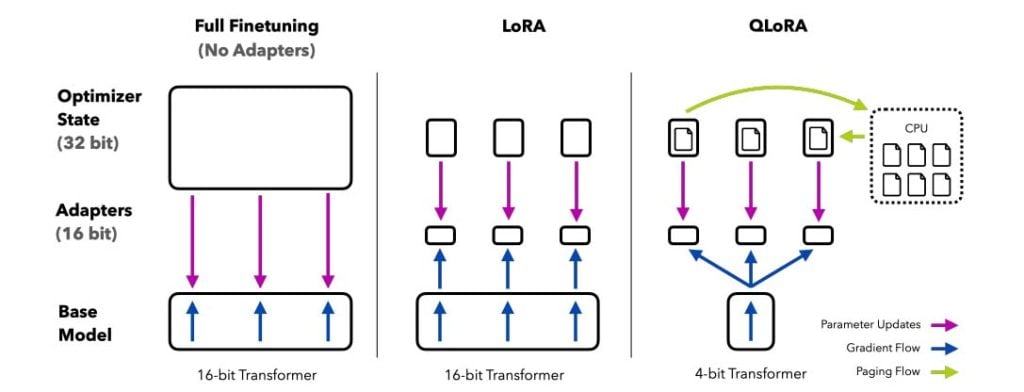

QLoRA is another method. It involves backpropagating gradients through a frozen, 4-bit quantized pre-trained language model into Low Rank Adapters (LoRA).

Quantization is a technique used to convert input data from a format containing more information to a format containing less information.

It involves converting data types that use more bits into types that use fewer bits, for example, converting 32-bit floating-point numbers to 8-bit integers.
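To see what this conversion looks like in practice, here is a minimal sketch of one simple scheme, symmetric "absmax" quantization to 8-bit integers. It is an illustration of quantization in general, not the 4-bit scheme QLoRA uses, and the function names and sample weights are made up.

```python
def quantize_absmax(values, bits=8):
    """Map floats to signed integers by scaling with the largest absolute value."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit signed integers
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the stored scale."""
    return [q * scale for q in quantized]

weights = [0.12, -0.5, 0.33, 1.27, -1.0]  # hypothetical float weights
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", q)
print("restored: ", [round(r, 4) for r in restored])
print("max error:", max_error)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half a quantization step. That trade of precision for memory is what quantized fine-tuning methods rely on.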

Source: https://arxiv.org

Fig 4: The variations between QLoRA and its predecessor, LoRA

The diagram illustrates the variations between QLoRA and its predecessor, LoRA. QLoRA applies 4-bit quantization to the transformer model and uses paged optimizers, which offload optimizer state to CPU memory, to handle memory spikes.

This practical approach enables efficient fine-tuning of LLMs like LLaMA, reducing memory demands while maintaining performance.
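A rough calculation shows why fewer bits matter. The sketch below estimates the memory needed just to hold the model weights at different precisions. The 7-billion-parameter size is an assumption chosen for illustration. The estimate ignores quantization constants, adapter weights, activations, and optimizer state, so real usage will be higher.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

num_params = 7_000_000_000  # assumption: a 7B-parameter model

memory_by_precision = {bits: weight_memory_gb(num_params, bits) for bits in (32, 16, 4)}
for bits, gb in memory_by_precision.items():
    print(f"{bits:>2}-bit weights: {gb:.1f} GB")
```

Going from 16-bit to 4-bit weights cuts the weight memory by a factor of four, which is often the difference between needing several GPUs and fitting on one.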

Besides LoRA and QLoRA, PEFT includes other methods:

For these methods, implementation requires writing code with PyTorch and a suitable transformer library.

Tools like Generative AI Studio by Google simplify the fine-tuning of LLMs. Select the base model, upload data in JSON format, and fine-tune the model. This way, users can save time and effort.

Allow me to wrap up my ideas…

In this blog, we’ve looked at the strategies and steps involved in pre-training a large language model. We’ve also looked at an overview of state-of-the-art LLM fine-tuning techniques.

Write to us with your thoughts on training LLMs. Also visit us at Nitor Infotech to see what we’ve been exploring in the AI technology realm!
