Artificial intelligence | 14 Feb 2024 | 19 min

Decoding LLMs: Understanding the Spectrum of LLMs from Training to Inference

Today, the technology landscape is buzzing with the remarkable advancements of Generative AI (GenAI). This innovative technology not only exhibits tremendous growth potential but also offers a wide range of capabilities that help businesses maximize their potential. At the heart of GenAI are Large Language Models (LLMs), a transformative technology that has taken the whole world by storm.


In simple words, think of LLMs as a type of AI program that can turn your simple idea into a Shakespeare-like write-up with just a few prompts. They use deep learning techniques, along with human input, to perform a diverse range of natural language processing (NLP) tasks proficiently.

Sounds literally like a playground, right?

To get into this field, you should learn how to train LLMs, which is considered a highly advanced skill to have.

So, in this blog, I’ll guide you through training LLMs effectively. You’ll also gain insights into fine-tuning methods and inference techniques for developing and deploying strong LLMs that enhance your business efficiency.

Let’s start!

Training LLMs involves 3 major steps. They are:


1. Data Preparation & Preprocessing: The success of LLM training hinges on ample high-quality text data. The initial training involves gathering diverse natural language text from sources like Common Crawl, books, Wikipedia, Reddit links, code, and more. This variety enhances the model’s language understanding and generation, thereby boosting the performance and adaptability of LLMs.

Next, the data must be preprocessed to construct a comprehensive data corpus. The quality of this preprocessing significantly influences both the performance and the security of the model.

Note: Key preprocessing steps include quality filtering, deduplication, privacy scrubbing, and the removal of toxic and biased text. These measures keep the training data clean and high-quality, ultimately improving the model’s overall performance.
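The preprocessing steps in the note can be sketched in a few lines of Python. This is a minimal, illustrative sketch assuming a small in-memory list of documents: it applies a hypothetical quality filter (a minimum word count and a minimum share of alphabetic characters), removes exact duplicates by hashing normalized text, and scrubs e-mail addresses as one simple form of privacy scrubbing. The thresholds and helper names (`passes_quality`, `scrub_pii`, `preprocess`) are assumptions for illustration; real pipelines typically add near-duplicate detection, classifier-based quality and toxicity filtering, and much broader PII handling.

```python
import hashlib
import re

# Simplified e-mail pattern (assumption); real PII scrubbing covers many more patterns
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def normalize(text):
    # Lowercase and collapse whitespace so trivially different copies hash identically
    return " ".join(text.lower().split())


def passes_quality(text, min_words=5, min_alpha_ratio=0.6):
    # Hypothetical heuristics: drop very short documents and ones that are mostly symbols/digits
    if len(text.split()) < min_words:
        return False
    non_space = [ch for ch in text if not ch.isspace()]
    alpha = sum(ch.isalpha() for ch in non_space)
    return alpha / len(non_space) >= min_alpha_ratio


def scrub_pii(text):
    # Replace e-mail addresses with a placeholder token
    return EMAIL_RE.sub("[EMAIL]", text)


def preprocess(docs):
    seen = set()
    cleaned = []
    for doc in docs:
        if not passes_quality(doc):
            continue
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:  # exact duplicate after normalization
            continue
        seen.add(digest)
        cleaned.append(scrub_pii(doc))
    return cleaned


docs = [
    "The quick brown fox jumps over the lazy dog.",
    "the  quick brown fox jumps over the LAZY dog.",  # duplicate once normalized
    "Contact me at jane.doe@example.com for the full report today.",
    "$$$ 1234 5678 !!!! ####",  # mostly symbols: filtered out
    "Too short.",  # below the minimum word count
]
cleaned = preprocess(docs)
print(cleaned)
```

The order here (filter, then deduplicate, then scrub) is one reasonable choice; hashing before scrubbing means two documents that differ only in an e-mail address are still treated as distinct.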

2. Pre-Training Tasks: LLMs learn language by practicing on large datasets from the internet. They use self-supervised tasks, such as predicting the next word in a sentence, where the training labels come from the text itself rather than from human annotation. This helps them master vocabulary, grammar, and text structure. As training continues, the model accumulates a wealth of linguistic knowledge.
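To make the next-word prediction objective concrete, here is a deliberately tiny sketch. It assumes whitespace tokenization and swaps the neural network for a bigram count model (a hypothetical stand-in; real LLMs use subword tokenizers and Transformer networks), but the self-supervised setup is the same: the targets are just the input tokens shifted by one position, and the loss is the average negative log-likelihood (cross-entropy) of each true next token.

```python
import math
from collections import Counter, defaultdict


def next_token_pairs(tokens):
    # Self-supervision: the target for each position is simply the token that follows it
    return list(zip(tokens[:-1], tokens[1:]))


def train_bigram(tokens):
    # Toy stand-in for an LLM: estimate P(next | current) from counts
    counts = defaultdict(Counter)
    for cur, nxt in next_token_pairs(tokens):
        counts[cur][nxt] += 1
    model = {}
    for cur, ctr in counts.items():
        total = sum(ctr.values())
        model[cur] = {nxt: c / total for nxt, c in ctr.items()}
    return model


def cross_entropy(model, tokens, eps=1e-9):
    # Average negative log-likelihood of the true next tokens (the pre-training loss)
    pairs = next_token_pairs(tokens)
    nll = [-math.log(model.get(cur, {}).get(nxt, 0.0) + eps) for cur, nxt in pairs]
    return sum(nll) / len(nll)


tokens = "the cat sat on the mat".split()
model = train_bigram(tokens)
loss = cross_entropy(model, tokens)
print(model["the"], round(loss, 4))
```

Lower cross-entropy means the model assigns higher probability to the words that actually come next; pre-training an LLM drives this same quantity down over billions of tokens.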

3. Model Training: This refers to finding the right values for a model’s parameters (its weights) by optimizing it on the training data. These are the types of training that you can follow:

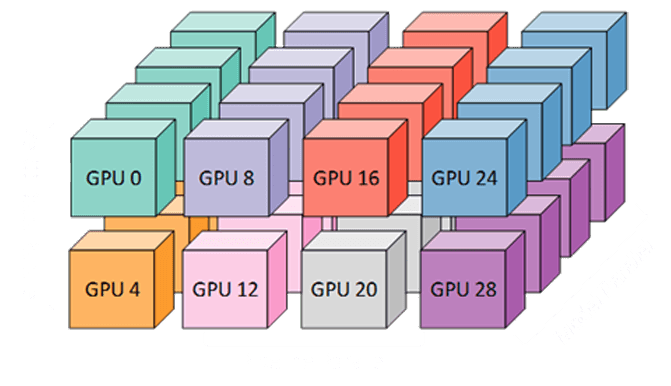

Fig: Parallel Training
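One common form of parallel training, data parallelism, can be simulated in plain Python: each worker holds a full copy of the model, computes gradients on its own shard of the batch, and the gradients are then averaged (the all-reduce step) so every replica applies the identical update. This sketch assumes a one-parameter linear model with mean squared error and equal-sized shards; the function names are hypothetical, and real frameworks perform the averaging across GPUs with collective communication.

```python
def mse_grad(w, b, xs, ys):
    # Gradient of mean squared error for the model y_hat = w * x + b on one shard
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
    grad_b = sum(2 * e for e in errors) / n
    return grad_w, grad_b


def data_parallel_step(w, b, xs, ys, num_workers, lr):
    assert len(xs) % num_workers == 0, "this sketch assumes equal-sized shards"
    size = len(xs) // num_workers
    # Each simulated worker computes gradients on its own shard of the batch
    grads = [mse_grad(w, b, xs[i * size:(i + 1) * size], ys[i * size:(i + 1) * size])
             for i in range(num_workers)]
    # All-reduce: average the gradients so every replica applies the same update
    grad_w = sum(g[0] for g in grads) / num_workers
    grad_b = sum(g[1] for g in grads) / num_workers
    return w - lr * grad_w, b - lr * grad_b


xs = [float(x) for x in range(8)]
ys = [2.0 * x + 1.0 for x in xs]  # ground truth: w = 2, b = 1
w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, xs, ys, num_workers=4, lr=0.02)
print(round(w, 3), round(b, 3))
```

Because the shards are equal-sized, the averaged gradient equals the full-batch gradient, so data parallelism spreads the work across devices without changing the math of each training step.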

Now that you know how to train LLMs, let’s explore ways to make them work better on specific tasks while keeping their language skills intact.

LLMs are fine-tuned through 4 main approaches, each tailored to a specific kind of improvement. This table will help you navigate through each of them:

| Approach | Supervised Fine-Tuning (SFT) | Alignment Tuning | Parameter-Efficient Tuning | Safety Fine-Tuning |
|---|---|---|---|---|
| Concept | Adjusts the LLM on labeled data for a specific task | Fine-tunes the LLM for “helpfulness, honesty, and harmlessness” | Minimizes computational cost by fine-tuning only a subset of parameters | Enhances safety by addressing LLM risks and ensuring secure outputs |
| Applications | Common for NLP tasks such as sentiment analysis, text classification, and QnA | Addresses issues like bias, factual errors, and harmful outputs in LLMs | Enables LLM fine-tuning on resource-constrained hardware, making it suitable for training with limited resources | Reduces the risk of biased, harmful, or misleading outputs, thus promoting the ethical use of LLMs |
| Pros | Effective for specific tasks; improves accuracy and controllability | Promotes responsible and safe LLM usage, thus building trust in generated outputs | Reduces costs and computational demands, thus allowing efficient fine-tuning on smaller devices | Mitigates LLM risks like bias, factual errors, and harmful content |
| Cons | Requires preparing labeled data, which can be time-consuming and expensive | Requires collecting human feedback data and can be more complex to implement | May not match full fine-tuning’s performance and requires specialized techniques like LoRA or Prefix Tuning | Requires effort to create and curate safety demonstration data |
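To illustrate parameter-efficient tuning, here is a minimal, pure-Python sketch of the LoRA idea named in the table: the pre-trained weight matrix `W` stays frozen, and only two small matrices `A` (r × k) and `B` (d × r) are trained, with their product scaled by `alpha / r`. `B` starts at zero, so the layer initially behaves exactly like the frozen one. The class name, rank, and scaling values are illustrative assumptions, and the gradient updates themselves are omitted; libraries such as Hugging Face PEFT provide production implementations.

```python
import random


def matvec(M, x):
    # Matrix-vector product for lists of lists
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen weight W (d x k) plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d, k = len(W), len(W[0])
        self.W = W  # pre-trained weights: frozen, never updated during fine-tuning
        self.scale = alpha / r
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, random init
        self.B = [[0.0] * r for _ in range(d)]  # d x r, zero init so the update starts at 0

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))  # low-rank path: B (A x)
        return [h + self.scale * dh for h, dh in zip(base, delta)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def merged_weight(self):
        # Fold the update into W for deployment: W + scale * (B @ A), so inference costs nothing extra
        r = len(self.A)
        return [[self.W[i][j] + self.scale * sum(self.B[i][t] * self.A[t][j] for t in range(r))
                 for j in range(len(self.W[0]))]
                for i in range(len(self.W))]


W = [[float(i + j) for j in range(6)] for i in range(4)]  # toy 4 x 6 "pre-trained" layer
layer = LoRALinear(W, r=2)
x = [1.0, 0.5, -1.0, 2.0, 0.0, 3.0]
print(layer.forward(x) == matvec(W, x), layer.trainable_params(), 4 * 6)
```

In this toy 4 × 6 layer the savings are small (20 trainable values versus 24), but they grow quickly with size: for a 4096 × 4096 weight matrix with r = 8, LoRA trains 8 × (4096 + 4096) = 65,536 values instead of 16,777,216.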

When we talk about fine-tuning, we must remember that certain frameworks can make LLM training far more memory-efficient by drawing on GPU, CPU, and NVMe memory together. Keep reading to learn more!

Here are the various frameworks that can be used:

Besides these, Colossal-AI and FastMoE, alongside popular frameworks like PyTorch, TensorFlow, PaddlePaddle, MXNet, OneFlow, MindSpore, and JAX, are widely used for LLM training with parallel computing support.

Let’s now proceed to assess LLMs!

These powerful models bring great capabilities and responsibilities. Evaluation has become more complex, requiring a thorough examination of potential issues and risks from various perspectives. Here are the evaluation strategies you can follow:

1. Evaluation by Static Testing Dataset: To evaluate LLMs effectively, use appropriate datasets for validation, such as GLUE, SuperGLUE, and MMLU. XTREME and XTREME-R are good choices for multilingual models. For multimodal LLMs in computer vision, consider ImageNet and Open Images. Use domain-specific datasets as well, such as MATH and GSM8K for mathematical knowledge and MedQA-USMLE and MedMCQA for medical knowledge.
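Many static benchmarks, MMLU among them, are multiple-choice, so scoring often reduces to comparing the model’s chosen option with the gold answer. The sketch below assumes each result has already been collected into a record with hypothetical `subject`, `gold`, and `pred` fields, and reports overall and per-subject accuracy; extracting the model’s chosen option from its raw output is left out.

```python
from collections import defaultdict


def accuracy_by_subject(records):
    # records: dicts with hypothetical keys "subject", "gold", and "pred" (option letters)
    totals, correct = defaultdict(int), defaultdict(int)
    for rec in records:
        subject = rec["subject"]
        totals[subject] += 1
        correct[subject] += rec["pred"].strip().upper() == rec["gold"].strip().upper()
    per_subject = {s: correct[s] / totals[s] for s in totals}
    overall = sum(correct.values()) / sum(totals.values())
    return overall, per_subject


records = [
    {"subject": "math", "gold": "A", "pred": "A"},
    {"subject": "math", "gold": "C", "pred": "B"},
    {"subject": "medicine", "gold": "D", "pred": "D"},
    {"subject": "medicine", "gold": "A", "pred": " a"},
]
overall, per_subject = accuracy_by_subject(records)
print(overall, per_subject)
```

Reporting per-subject scores alongside the overall number helps reveal domain gaps (for example, strong general knowledge but weak medical reasoning) that a single average would hide.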

2. Evaluation by Open Domain Question Answering: LLMs increasingly answer questions in a realistic, human-like way, so evaluating Open Domain Question Answering (ODQA) is crucial for user experience. We mostly use datasets like SQuAD, along with the F1 score and Exact Match (EM) accuracy metrics. However, F1 and EM have limitations, creating a need for human evaluation, particularly when the correct answer is not in a predefined list.
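The two ODQA metrics can be computed as follows. This sketch is modeled on the answer normalization commonly used for SQuAD-style evaluation (lowercasing, stripping punctuation and the articles “a”, “an”, and “the”, and collapsing whitespace); the function names are illustrative. EM checks for an identical normalized string, while F1 rewards partial token overlap, and when several reference answers exist the best score across them is taken.

```python
import re
import string
from collections import Counter


def normalize_answer(text):
    # Lowercase, drop punctuation, drop English articles, collapse whitespace
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, truth):
    return float(normalize_answer(prediction) == normalize_answer(truth))


def f1_score(prediction, truth):
    pred_tokens = normalize_answer(prediction).split()
    truth_tokens = normalize_answer(truth).split()
    # Multiset intersection counts tokens shared by prediction and reference
    overlap = sum((Counter(pred_tokens) & Counter(truth_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)


def best_score(metric, prediction, truths):
    # Take the best match across all acceptable reference answers
    return max(metric(prediction, t) for t in truths)


print(exact_match("The Eiffel Tower!", "eiffel tower"))
print(f1_score("the tower in Paris", "Eiffel Tower"))
print(best_score(exact_match, "Paris", ["Lyon", "paris"]))
```

The partial answer “the tower in Paris” gets no EM credit but some F1 credit; a correct answer phrased differently from every reference can still score poorly on both, which is why human evaluation remains necessary.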

3. Security Evaluation: To assess potential biases, privacy protection, and robustness against adversarial attacks, the following specific considerations apply:

Did you know? Automated evaluations (BLEU, ROUGE, BERTScore) assess LLMs quickly but struggle with complex or nuanced outputs. Manual evaluations by humans are reliable but time-consuming. Combining both methods is essential for a complete understanding of model performance.
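To see why automated metrics struggle, consider a simplified ROUGE-L, which scores the longest common subsequence (LCS) of tokens shared by a candidate and a reference. This sketch assumes lowercase whitespace tokenization and reports the F1 form, without the stemming and other options found in full implementations; the function names are illustrative. A near-verbatim candidate scores high, while a paraphrase with similar meaning scores low.

```python
def lcs_length(a, b):
    # Dynamic programming table: dp[i][j] = LCS length of a[:i] and b[:j]
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]


def rouge_l_f1(candidate, reference):
    cand, ref = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
print(rouge_l_f1("the cat lay on the mat", reference))      # near-verbatim wording
print(rouge_l_f1("a feline rested on the rug", reference))  # paraphrase with similar meaning
```

The first candidate scores 5/6 while the paraphrase scores only 1/3, even though a human reader might judge both acceptable; that gap is exactly where manual evaluation adds value.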

You’ve almost mastered training and evaluating LLMs. To maximize your LLM skills, embrace these final best practices.

LLM sizes can grow almost 10 times yearly, leading to significant computational and carbon footprint concerns. To address this issue and reduce the cost of running LLMs, you should apply inference optimization.

What’s that?

Well, think of it as a way to make models run faster and use less energy. Here are the techniques you can use:

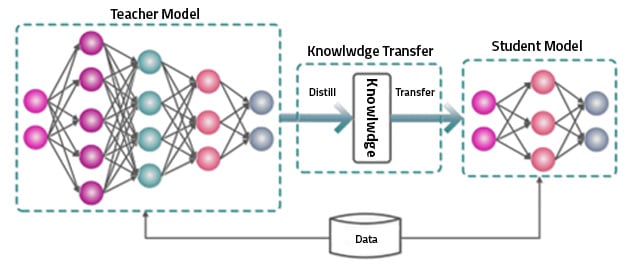

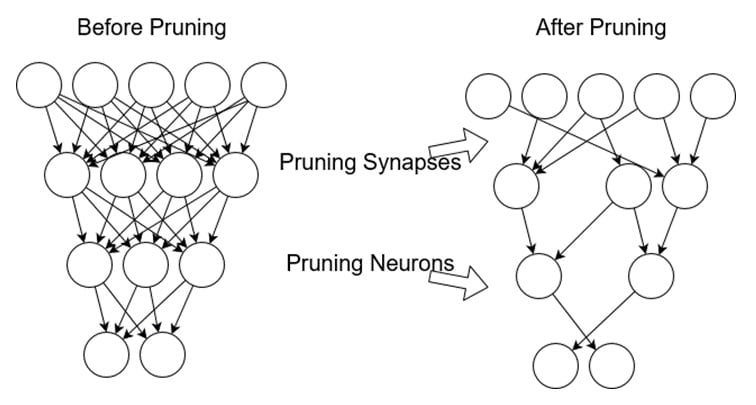

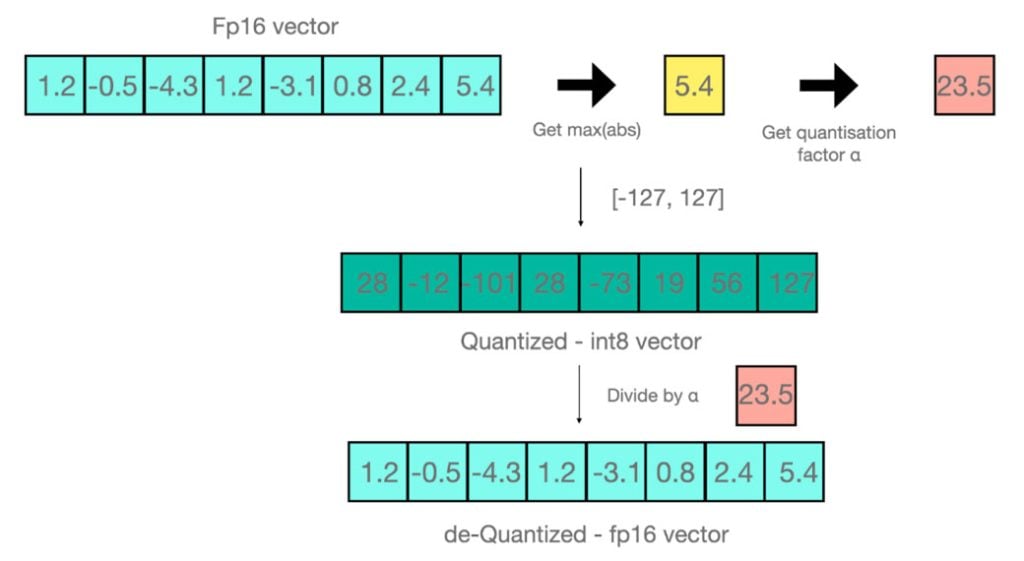

1. Model Compression: This can be achieved in the following ways:

Fig: Knowledge Distillation

Fig: Model Pruning

Fig: Model Quantization
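The three compression techniques shown in the figures can each be sketched in a few lines. The code below is illustrative and framework-free, with hypothetical function names: a knowledge distillation loss that pushes a student’s temperature-softened output distribution toward the teacher’s (KL divergence scaled by T², a common formulation), unstructured magnitude pruning that zeroes the smallest weights, and symmetric per-tensor int8 quantization with a single scale factor.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, T=2.0):
    # KL(teacher || student) on temperature-softened distributions, scaled by T^2
    p = softmax(teacher_logits, T)
    q = softmax(student_logits, T)
    return T * T * sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def magnitude_prune(weights, sparsity):
    # Zero out the fraction `sparsity` of weights with the smallest absolute values
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    # Symmetric per-tensor quantization: map [-max|w|, max|w|] onto integers in [-127, 127]
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    # Recover approximate float weights from the int8 codes
    return [v * scale for v in q]


weights = [0.5, -0.1, 0.03, -0.8, 0.2]
print(distillation_loss([1.0, 0.5, -1.0], [2.0, 0.1, -1.5]))
print(magnitude_prune(weights, sparsity=0.4))
q, scale = quantize_int8([0.5, -1.27, 0.0, 1.0])
print(q, dequantize(q, scale))
```

Each technique trades a little accuracy for size or speed: distillation trains a smaller model, pruning makes weights sparse, and quantization stores each weight in 8 bits instead of 32, with a rounding error of at most half the scale factor.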

2. Weight Sharing: Weight sharing in large language models involves using a common set of parameters across multiple components. By avoiding distinct parameters for each part, this method reduces the number of parameters that must be learned and stored, which saves memory, helps mitigate overfitting, and proves beneficial in scenarios with limited data.
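A common example of weight sharing in language models is tying the input embedding matrix to the output projection, so a single vocabulary × dimension matrix serves both roles. The toy class below is hypothetical and omits the Transformer layers in between; it only shows that the embedding lookup and the output logits read from the same matrix `E`, halving the parameters those two components would otherwise need.

```python
import random


class TiedEmbeddingLM:
    """Toy model whose input embedding and output projection share one matrix E (vocab x dim)."""

    def __init__(self, vocab_size, dim, seed=0):
        rng = random.Random(seed)
        self.E = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]

    def embed(self, token_id):
        # Input side: look up a row of E
        return self.E[token_id]

    def logits(self, hidden):
        # Output side: score every vocabulary item against the hidden state with the same E
        return [sum(e * h for e, h in zip(row, hidden)) for row in self.E]

    def num_params(self):
        return len(self.E) * len(self.E[0])


def untied_param_count(vocab_size, dim):
    # Separate embedding and output matrices would need twice the parameters
    return 2 * vocab_size * dim


model = TiedEmbeddingLM(vocab_size=10, dim=4)
hidden = model.embed(3)  # stand-in for the output of the omitted Transformer layers
print(len(model.logits(hidden)), model.num_params(), untied_param_count(10, 4))
```

For a hypothetical 50,000-token vocabulary and 4,096-dimensional model, tying saves 50,000 × 4,096 = 204,800,000 parameters, and every gradient update to `E` improves both the input and output representations at once.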

3. Low-rank Approximation: Low-rank decomposition of a large language model involves factorizing its weight matrices into products of smaller, low-rank matrices, reducing computational complexity while retaining essential information. This enables more efficient training and deployment, often with little loss in model performance.
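Low-rank approximation can be sketched with a truncated SVD: keep the top r singular components of a weight matrix W (m × n), store them as factors A (m × r) and B (r × n), and compute W x as A (B x). To stay dependency-free, this illustrative sketch finds the singular components with power iteration and deflation rather than a library SVD routine (in practice you would use one, such as NumPy’s `numpy.linalg.svd`); the helper names are hypothetical.

```python
import math
import random


def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def top_singular_triplet(W, iters=500, seed=0):
    # Power iteration on W^T W converges to the top right singular vector v
    # (assumes W is nonzero, i.e. we never ask for more components than its rank)
    rng = random.Random(seed)
    Wt = transpose(W)
    v = [rng.random() + 0.1 for _ in range(len(W[0]))]
    for _ in range(iters):
        v = matvec(Wt, matvec(W, v))
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
    Wv = matvec(W, v)
    sigma = math.sqrt(sum(x * x for x in Wv))  # singular value = ||W v||
    u = [x / sigma for x in Wv]  # matching left singular vector
    return sigma, u, v


def low_rank_factors(W, rank):
    # Greedy deflation: peel off one singular component at a time
    residual = [row[:] for row in W]
    a_cols, b_rows = [], []
    for _ in range(rank):
        sigma, u, v = top_singular_triplet(residual)
        a_cols.append([sigma * ui for ui in u])  # fold sigma into the left factor
        b_rows.append(v)
        residual = [[residual[i][j] - sigma * u[i] * v[j] for j in range(len(v))]
                    for i in range(len(u))]
    return transpose(a_cols), b_rows  # A is m x r, B is r x n


def param_counts(m, n, r):
    # Dense matrix vs. the two low-rank factors
    return m * n, r * (m + n)


W = [[1.0, 2.0, 4.0], [3.0, 1.0, 7.0], [0.0, 1.0, 1.0], [2.0, 2.0, 6.0]]  # exactly rank 2
A, B = low_rank_factors(W, rank=2)
x = [1.0, -1.0, 0.5]
print(matvec(W, x), matvec(A, matvec(B, x)))
print(param_counts(4096, 4096, 64))
```

For this toy 4 × 3 matrix the factors are not smaller, but for a 4096 × 4096 matrix kept at rank 64 they store 524,288 values instead of 16,777,216. Real weight matrices are only approximately low-rank, so the chosen rank trades accuracy for compression.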

Bonus: 2 technical intricacies to remember during inference optimization:

So, these frameworks serve as fundamental pillars, offering crucial infrastructure and tools for the seamless deployment of LLMs across a spectrum of applications.

ChatGPT, for example, marks a significant turning point in the realm of Large Language Models, influencing a wide variety of tasks. Businesses must therefore deepen their understanding of this dynamic field and promote innovation in developing Large Language Models to stay ahead.

If you wish to learn more about LLMs and other cutting-edge technologies, feel free to reach out to us at Nitor Infotech.
