Artificial intelligence | 05 Jun 2019 | 6 min

Offline Face Detection & Comparison for the Insurance domain using FaceNet

The insurance industry is considered one of the most competitive, yet least predictable, domains. Insurance policies safeguard against uncertainty and are therefore exposed to risk. For this reason, the industry has always depended on statistics, and insurance companies have certainly benefited from data science applications.

One of the most interesting use cases we came across is detecting faces in images and comparing two or more images to predict whether the faces identified in them belong to the same person.

The detailed requirement is that an insurance executive should be able to capture both images with his or her mobile device. The device then processes those images, compares the people in them, and reports the outcome.

Despite significant recent advances in face recognition, implementing face verification and recognition efficiently remains challenging for current approaches. We therefore chose the FaceNet system to measure face similarity. First, we used a CNN to detect faces. Second, we used FaceNet to map each detected face to a vector embedding and then compared those vectors to predict similarity.
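
The two-stage pipeline described above can be sketched as follows. This is a minimal sketch under stated assumptions, not our production code: `detect_faces` and `embed_face` are hypothetical stand-ins for the CNN face detector and the FaceNet model, images are plain nested lists, and the distance threshold of 1.0 is an assumed value that would need tuning.

```python
import math
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]        # hypothetical: a grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, bottom, right) in pixel coordinates


def crop(image: Image, box: Box) -> Image:
    """Cut the detected face region out of the full image."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def same_person(
    image_a: Image,
    image_b: Image,
    detect_faces: Callable[[Image], List[Box]],      # stage 1: hypothetical CNN face detector
    embed_face: Callable[[Image], Sequence[float]],  # stage 2: hypothetical FaceNet embedder
    threshold: float = 1.0,                          # assumed cut-off, tune on labelled pairs
) -> bool:
    """Detect a face in each image, embed both faces, and compare the embeddings."""
    boxes_a = detect_faces(image_a)
    boxes_b = detect_faces(image_b)
    if not boxes_a or not boxes_b:
        raise ValueError("no face detected in at least one image")
    # Assumption: each photo contains one relevant face, so take the first detection.
    emb_a = embed_face(crop(image_a, boxes_a[0]))
    emb_b = embed_face(crop(image_b, boxes_b[0]))
    # A small Euclidean distance between embeddings means the faces are similar.
    return math.dist(emb_a, emb_b) < threshold
```

Passing the detector and the embedder in as functions keeps the comparison logic independent of whichever detection CNN and FaceNet weights are actually deployed on the device.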

What is FaceNet?

FaceNet is a system that directly learns a mapping from face images to a compact Euclidean space where distances directly correspond to a measure of face similarity. Once this space has been produced, tasks such as face recognition, verification, and clustering can be easily implemented using standard techniques with FaceNet embeddings as feature vectors.
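
Once faces are embeddings, recognition reduces to a nearest-neighbour search: compare a new face against a gallery of known embeddings and accept the closest one only if it is close enough. The sketch below assumes an in-memory `gallery` dictionary and an illustrative threshold of 1.0; `identify` is a hypothetical helper, not part of any FaceNet API.

```python
import math
from typing import Dict, Optional, Sequence


def identify(
    probe: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    threshold: float = 1.0,  # assumed cut-off; tune on labelled data
) -> Optional[str]:
    """Return the name of the closest known face, or None if none is within threshold."""
    if not gallery:
        return None
    # Nearest neighbour by Euclidean distance in embedding space.
    best_name, best_distance = min(
        ((person, math.dist(probe, emb)) for person, emb in gallery.items()),
        key=lambda pair: pair[1],
    )
    return best_name if best_distance < threshold else None


# Toy 2-D "embeddings" for illustration; real FaceNet embeddings are much longer.
gallery = {"alice": [0.0, 1.0], "bob": [1.0, 0.0]}
print(identify([0.1, 0.9], gallery))  # alice
print(identify([5.0, 5.0], gallery))  # None
```

Verification, which is what the insurance use case needs, is the special case of a gallery holding a single entry.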

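FaceNet maps each face to a point in a 128-dimensional Euclidean space. The L2 (Euclidean) distance between two face embeddings measures how similar the faces are: the smaller the distance, the more likely the two faces belong to the same person. This is the same as measuring the distance between two points on a plane to see whether they are close to each other, only in 128 dimensions instead of two.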
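
The distance computation itself is short. The sketch below spells out the Euclidean distance formula, checks it on the familiar two-dimensional case, and applies a match threshold; the threshold of 1.0 is an assumed, illustrative value, not one taken from FaceNet.

```python
import math
from typing import Sequence

EMBEDDING_DIM = 128  # embedding size described above


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L2 distance: square root of the sum of squared component differences."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def is_match(a: Sequence[float], b: Sequence[float], threshold: float = 1.0) -> bool:
    """Treat two embeddings as the same person when closer than `threshold` (assumed value)."""
    return euclidean_distance(a, b) < threshold


# Two points on a plane: the classic 3-4-5 right triangle.
print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0

# The same formula works unchanged for 128-dimensional face embeddings.
face_a = [0.05] * EMBEDDING_DIM
face_b = [0.0] * EMBEDDING_DIM
print(is_match(face_a, face_b))  # True
```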

The FaceNet model is a deep convolutional network trained with a triplet loss. The triplet loss minimizes the distance between an anchor and a positive (another image of the same person) while maximizing the distance between the anchor and a negative (an image of a different person).
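
For a single (anchor, positive, negative) triplet, the loss is max(||f(a) - f(p)||^2 - ||f(a) - f(n)||^2 + alpha, 0), summed over the training triplets, where alpha is a margin that requires the negative to be farther from the anchor than the positive by at least alpha in squared distance. The sketch below only computes the loss on given embeddings (it does not train a network), and the default margin of 0.2 is an assumed hyperparameter.

```python
from typing import Iterable, Sequence, Tuple

Embedding = Sequence[float]


def squared_distance(a: Embedding, b: Embedding) -> float:
    """Squared Euclidean distance, as used in the triplet loss."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def triplet_loss(anchor: Embedding, positive: Embedding, negative: Embedding,
                 margin: float = 0.2) -> float:
    """Hinge on (anchor-positive distance) - (anchor-negative distance) + margin."""
    return max(squared_distance(anchor, positive)
               - squared_distance(anchor, negative) + margin, 0.0)


def batch_triplet_loss(triplets: Iterable[Tuple[Embedding, Embedding, Embedding]],
                       margin: float = 0.2) -> float:
    """Sum the per-triplet losses over a batch."""
    return sum(triplet_loss(a, p, n, margin) for a, p, n in triplets)


anchor, positive = [0.0, 0.0], [0.1, 0.0]
easy_negative = [1.0, 0.0]  # already far from the anchor: zero loss
hard_negative = [0.2, 0.0]  # too close to the anchor: positive loss
print(triplet_loss(anchor, positive, easy_negative))  # 0.0
print(triplet_loss(anchor, positive, hard_negative))
```

Triplets that already satisfy the margin contribute nothing, which is why FaceNet-style training puts so much effort into selecting hard triplets.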

Why FaceNet?

FaceNet performs well on real-world images. It builds on the Inception ResNet v1 architecture and is trained on the CASIA-WebFace and VGGFace2 datasets. Its weights are optimized with the triplet loss so that it learns to embed face images on the surface of a 128-dimensional hypersphere, meaning every embedding has unit L2 norm.
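
Scaling every embedding to unit length is what places it on the hypersphere. A useful consequence, sketched below, is that for unit vectors the squared distance equals 2 - 2·cos(θ), so distances always fall between 0 and 2 and ranking by distance is equivalent to ranking by cosine similarity. `l2_normalize` is an illustrative helper, and its small `eps` guard against division by zero is an implementation assumption.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float], eps: float = 1e-10) -> List[float]:
    """Scale a vector to unit length so it lies on the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]  # eps avoids dividing by zero


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


u = l2_normalize([3.0, 4.0])   # unit vector pointing the same way as (3, 4)
w = l2_normalize([4.0, -3.0])  # unit vector orthogonal to u
# For unit vectors: ||u - w||^2 = 2 - 2 * (u . w) = 2 - 2 * cos(angle between them)
print(squared_distance(u, w), 2 - 2 * dot(u, w))
```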

A Complete Solution

The screenshots below show our FaceNet implementation in action:

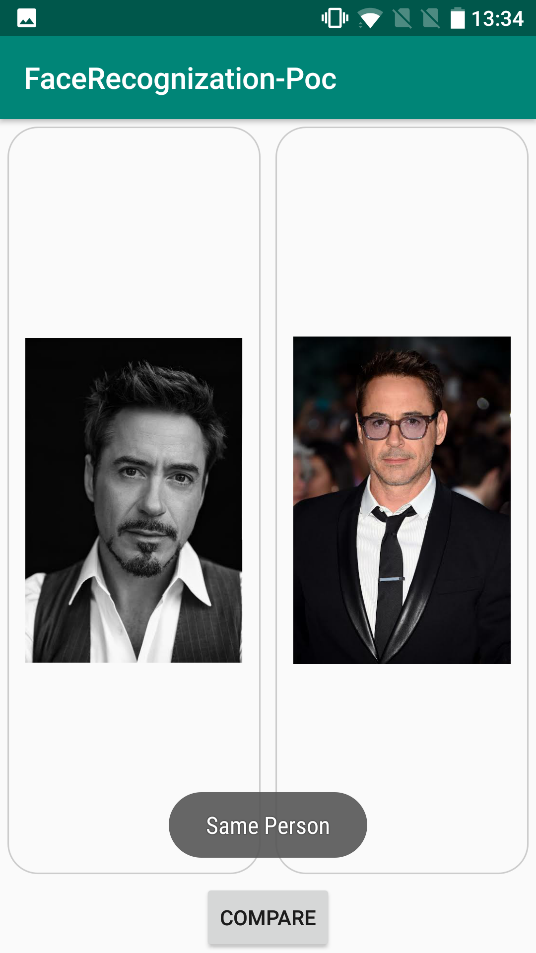

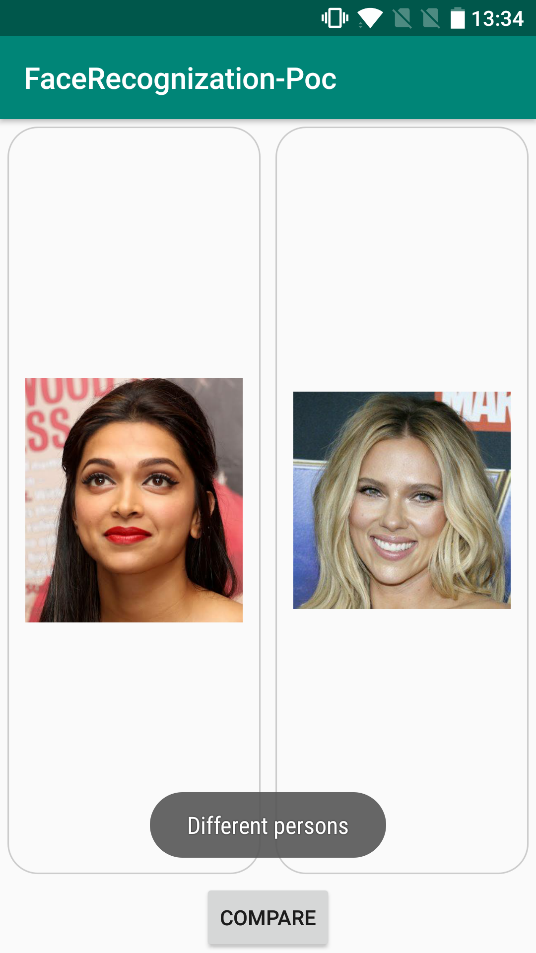

Screenshots of matches and of no matches.

Conclusion

Face technology is making its way into the mainstream. A growing number of companies also offer developer-friendly face APIs through their SaaS platforms, making it much easier and cheaper to incorporate state-of-the-art face technology into many kinds of products.

By now, you should be familiar with how face recognition systems work and how to make your own simplified face recognition system using a version of the FaceNet network.
