Big Data and Analytics | 19 Oct 2022 | 25 min

Everything You Need to Know About Elasticsearch

Ask any experienced full stack developer to define Elasticsearch and they will most likely fumble; very few can give you a concise explanation of what it truly is. So, with that in mind, I decided to write this blog to give you a comprehensive overview of Elasticsearch and everything that comes with it.

I'm sure you are aware that search and analytics are key features of almost any modern software application. Mobile apps, as well as web and data analytics applications, must scale and handle massive volumes of real-time data effectively. Today, even a fairly basic application offers usability features such as autocomplete, search suggestions, and location search. And what often powers these experiences? You guessed it: Elasticsearch!

Simply put, Elasticsearch is a distributed full-text search and analytics engine built on the Apache Lucene library. It stores data as JSON documents and can infer field mappings from the data you index, so you do not have to define a schema up front. If you need extensive search functionality, or have a website that performs heavy search, Elasticsearch is a strong choice. It is also very versatile.

Elasticsearch is extremely powerful and has many use cases, which is why I have already sung its praises in my introduction. But the main reason I believe it is an easy choice for businesses is how simply it integrates with existing systems: once connected, it can search and analyze almost any data, and newly indexed documents become searchable in near real time (within about a second by default).

So, without further ado, here are some popular use cases for Elasticsearch (just the ones at the top of my mind):

1. Logging and log analysis

2. Security analysis – In addition to existing firewalls, it can be integrated with an IDS (Intrusion Detection System).

3. Easy integration – Because Elasticsearch exposes a REST API, it can be integrated with any system that can make HTTP API calls.

4. Tooling – It comes with a whole set of companion tools (Logstash, Kibana, etc.) that make it more automated and convenient to use.

Before I delve into the details, here is how you can install Elasticsearch:

1. Download Elasticsearch and unzip it wherever you want to install it.

2. Run bin/elasticsearch (or bin\elasticsearch.bat on Windows)

3. Run curl http://localhost:9200/ (or Invoke-RestMethod http://localhost:9200 in PowerShell) to check that the node is running.

4. Dive into the Getting started guide and video.
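If you would rather script the check from step 3, here is a minimal Python sketch using only the standard library. It assumes a local node at http://localhost:9200 with security features disabled (no credentials or TLS), as in the 7.x setup used in this blog; the helper names are hypothetical.

```python
import json
import urllib.request

ES_URL = "http://localhost:9200"  # assumption: local node, security disabled


def parse_root_info(raw: bytes) -> dict:
    """Pick out the useful fields from the JSON that GET / returns."""
    info = json.loads(raw)
    return {
        "cluster": info["cluster_name"],
        "version": info["version"]["number"],
    }


def check_node(url: str = ES_URL) -> dict:
    """Send GET / (the same request as the curl command) and summarize the reply."""
    with urllib.request.urlopen(url + "/", timeout=5) as resp:
        return parse_root_info(resp.read())
```

Call check_node() once the node has started; if nothing is listening on the port, urlopen raises an error instead.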

Generally, Elasticsearch comes into the picture whenever there are large volumes of data. To search this data effectively, we store it in an index. An index in Elasticsearch is roughly comparable to a database in a relational system (in recent versions, where an index holds a single kind of document, a table is the closer analogy). It has a mapping, which defines the fields its documents contain and the data type of each. We can also say that an index is a logical namespace that maps to one or more primary shards and can have zero or more replica shards.

From this, we can infer two things. First, an index is a data-organization mechanism that lets you partition data in a particular way. Second, an index is physically split into primary shards, each of which can have replica copies for redundancy and read throughput.
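To make the link between an index and its primary shards concrete, here is a simplified Python sketch of document routing. Elasticsearch picks a shard roughly as hash(_routing) % number_of_primary_shards, where _routing defaults to the document ID. The real implementation uses a Murmur3 hash and an internal routing factor, so zlib.crc32 below is only a stand-in.

```python
import zlib


def route_to_shard(doc_id: str, number_of_primary_shards: int) -> int:
    """Simplified routing: hash(_routing) % number_of_primary_shards.

    zlib.crc32 is a stand-in hash; Elasticsearch itself uses Murmur3.
    """
    routing_hash = zlib.crc32(doc_id.encode("utf-8"))
    return routing_hash % number_of_primary_shards


# Every document ID deterministically lands on exactly one primary shard.
placement = {doc_id: route_to_shard(doc_id, 3) for doc_id in ["1", "2", "3", "42"]}
```

Because the shard number depends on number_of_primary_shards, changing that count would send existing IDs to different shards. That is why the primary shard count is fixed when an index is created (changing it means reindexing or using the split/shrink APIs), while the number of replicas can be changed at any time.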

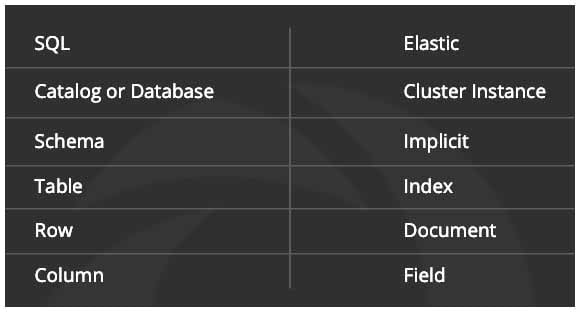

Now, let's see how Elasticsearch concepts map to those of a relational database. If you come from a relational background, the terminology can be hard to understand at first.

But don't worry. I will take you through some sample queries that will help you get a grasp of it.

Before I do that, let's look at how to create an index. When creating an index, we need to keep its mappings in mind: well-designed mappings make querying the index much easier.

Creating an index is the first step towards leveraging the power of Elasticsearch. Elasticsearch can also create indices automatically: when you index a document into an index that does not exist yet, it creates that index and infers its mappings from the data you pushed (this is called dynamic mapping).
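The Python sketch below approximates what dynamic mapping does with a new document, following Elasticsearch's default rules for JSON values: booleans become boolean, whole numbers long, floating-point numbers float, inner objects get their own properties, and strings become text with a keyword sub-field. It is a simplification: real dynamic mapping also detects dates inside strings and handles arrays and null values, which this sketch skips.

```python
def infer_mapping(doc: dict) -> dict:
    """Approximate Elasticsearch's default dynamic mapping for one JSON document.

    Simplified sketch: no date detection in strings; arrays and
    null values are skipped.
    """
    properties = {}
    for field, value in doc.items():
        if isinstance(value, bool):  # test bool first: bool is a subclass of int
            properties[field] = {"type": "boolean"}
        elif isinstance(value, int):
            properties[field] = {"type": "long"}
        elif isinstance(value, float):
            properties[field] = {"type": "float"}
        elif isinstance(value, str):
            # Full-text field plus an exact-value "keyword" sub-field.
            properties[field] = {
                "type": "text",
                "fields": {"keyword": {"type": "keyword", "ignore_above": 256}},
            }
        elif isinstance(value, dict):
            # Inner JSON objects become object fields with their own properties.
            properties[field] = infer_mapping(value)
        # Anything else (lists, None) is ignored in this sketch.
    return {"properties": properties}


print(infer_mapping({"name": "Mark", "age": 30, "rating": 4.5, "active": True}))
```

Relying on inferred mappings is convenient for experiments, but defining mappings explicitly when you create the index gives you control over how each field is searched.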

Elasticsearch provides an HTTP endpoint for creating an index, and you can also create indices from Kibana. Here is an example that creates an employee index:

- curl -X PUT "localhost:9200/employee?pretty"

Alternatively, you can pass settings and mappings in the request body when creating the index:

```
PUT /employee
{
  "settings": {
    "number_of_shards": 1
  },
  "mappings": {
    "properties": {
      "name": { "type": "text" }
    }
  }
}
```
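Any HTTP client can send the same request. This Python sketch builds it with urllib from the standard library; the local URL and the helper name are assumptions, and nothing is sent until you pass the request to urlopen.

```python
import json
import urllib.request


def create_index_request(base_url: str, index: str, body: dict) -> urllib.request.Request:
    """Build a PUT /<index> request carrying settings and mappings as JSON."""
    return urllib.request.Request(
        url=f"{base_url}/{index}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


employee_index = {
    "settings": {"number_of_shards": 1},
    "mappings": {"properties": {"name": {"type": "text"}}},
}

req = create_index_request("http://localhost:9200", "employee", employee_index)
# To actually create the index against a running node:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```

Elasticsearch rejects this request if the index already exists, so send it only once per index.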

Searching an index is just as simple and works like any other API call: provide the URL and the request body, and you are done.

URL: GET employee/_search

Request body:

```json
{
  "query": {
    "match": {
      "name": "Mark"
    }
  }
}
```

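Let's break it down: the URL names the index to search (employee) followed by the _search endpoint, and the request body holds the query, here a match query that looks for "Mark" in the name field.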

Now, what if I want to search across multiple fields? Our request body changes as follows:

Request body:

```json
{
  "query": {
    "multi_match": {
      "query": "Mark",
      "fields": ["first_name", "last_name"]
    }
  }
}
```

As I said, it is pretty simple to understand and frame a query.
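Because the only difference between the two requests is the query clause, a client can build either body from the same inputs. Here is a small Python sketch (the function name is hypothetical) that returns a match query for one field and a multi_match query for several:

```python
import json


def build_search_body(text: str, fields: list[str]) -> dict:
    """Return a match body for one field, or a multi_match body for several."""
    if not fields:
        raise ValueError("at least one field is required")
    if len(fields) == 1:
        return {"query": {"match": {fields[0]: text}}}
    return {"query": {"multi_match": {"query": text, "fields": list(fields)}}}


# The body to send with GET employee/_search:
print(json.dumps(build_search_body("Mark", ["first_name", "last_name"])))
```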

Now let's look at the different types of queries we can use in Elasticsearch. Searching in Elasticsearch is done with the Query DSL, a JSON-based query language.

A query in Elasticsearch is built from two kinds of clauses: leaf query clauses and compound query clauses.

Leaf query clauses – These look for a particular value in a particular field, for example match, term, or range queries.

Compound query clauses – These wrap other leaf or compound queries and combine them logically to produce the desired result.

The different types of queries are as follows –

Match all query – This is the most basic query. It matches all documents, giving every one a score of 1.0.

```json
{
  "query": {
    "match_all": {}
  }
}
```

Its counterpart, the match none query, matches no documents at all:

```json
{
  "query": {
    "match_none": {}
  }
}
```

Full-text queries – These queries are used to search analyzed text, such as the body of a chapter or a news article.

The queries in this group are:

A. match query

This query analyzes the provided text and matches it against the values of a single field.

{ "query": { "match": { "rating":"4.5" } }}

B. match_phrase query

It's similar to the match query, but it matches exact phrases (or, with the slop parameter, words in close proximity).

{"query": {"match_phrase":{"message":"this is a test"}}}

C. match_phrase_prefix query

Useful for search-as-you-type, this query works like the match_phrase query but treats the final word as a prefix.

{"query": {"match_phrase_prefix":{"message":"quick brown f"}}}

D. multi_match query

This query builds on the match query to search the same text across multiple fields.

{"query": {"multi_match":{"query":"query String","fields":["field1","field2"]}}}

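E. common terms query

A more specialized query that gives more weight to uncommon words. Note that it was deprecated in Elasticsearch 7.3 and removed in 8.0, so prefer a plain match query in newer versions.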

{"query": {"common": {"body": {"query": "this is string ","cutoff_frequency":0.001}}}}

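F. query_string query

This query parses its input with a strict syntax that supports operators such as AND, OR, and NOT, as well as wildcards and field names.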

{ "query": { "query_string":{ "query":"string" } }}

G. simple_query_string query

A simpler, more robust version of the query string syntax, suitable for exposing directly to users.

{"query": {"simple_query_string":{"query":"\"fried eggs\" +(eggplant | potato) -frittata","fields":["title^5","body"],"default_operator":"and"}}}

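Term-level queries – These queries find documents based on exact values in structured data such as numbers, dates, and enums. The term query, for example, returns documents that contain an exact term in a field: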

```json
{
  "query": {
    "term": {
      "zip": "176115"
    }
  }
}
```
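Why use term for exact values rather than match? A match query analyzes its input the same way the field was analyzed at index time, while a term query looks up the input unchanged. The toy Python sketch below shows the effect with a tiny inverted index; its analyze function (lowercasing and splitting on non-word characters) is a rough stand-in for Elasticsearch's standard analyzer, not its actual implementation.

```python
import re
from collections import defaultdict


def analyze(text: str) -> list[str]:
    """Rough stand-in for the standard analyzer: lowercase, split on non-word chars."""
    return [tok for tok in re.split(r"\W+", text.lower()) if tok]


def build_inverted_index(docs: dict[int, str]) -> dict[str, set[int]]:
    """Map each analyzed term to the IDs of the documents that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in analyze(text):
            index[term].add(doc_id)
    return index


def match_query(index, text: str) -> set[int]:
    """match: analyze the input, then return docs containing any of its terms."""
    hits: set[int] = set()
    for term in analyze(text):
        hits |= index.get(term, set())
    return hits


def term_query(index, value: str) -> set[int]:
    """term: look the input up exactly as given, with no analysis."""
    return set(index.get(value, set()))


docs = {1: "Quick Brown Fox", 2: "lazy dog"}
inv = build_inverted_index(docs)
```

Here match_query(inv, "Quick") finds document 1, but term_query(inv, "Quick") finds nothing, because the index only holds the lowercased term quick. This is why term queries belong on keyword, numeric, or date fields rather than on analyzed text fields.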

Range query – This query finds documents whose values fall within a range. It uses the operators gt (greater than), gte (greater than or equal to), lt (less than), and lte (less than or equal to).

Ex.

```json
{
  "query": {
    "range": {
      "rating": {
        "gte": 3.5
      }
    }
  }
}
```
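To make the four operators precise, here is a Python sketch that applies the same bounds to a plain value. in_range is a hypothetical helper that mirrors what a range clause such as {"gte": 3.5} checks; it is not how Elasticsearch evaluates the query internally.

```python
import operator

# Range-query operators and the comparison each one performs.
RANGE_OPERATORS = {
    "gt": operator.gt,
    "gte": operator.ge,
    "lt": operator.lt,
    "lte": operator.le,
}


def in_range(value, bounds: dict) -> bool:
    """True if value satisfies every bound, e.g. {"gte": 3.5, "lt": 5}."""
    for op_name, bound in bounds.items():
        if op_name not in RANGE_OPERATORS:
            raise ValueError(f"unsupported range operator: {op_name}")
        if not RANGE_OPERATORS[op_name](value, bound):
            return False
    return True


ratings = [2.0, 3.5, 4.2, 5.0]
matching = [r for r in ratings if in_range(r, {"gte": 3.5})]
```

Combining bounds, as in {"gte": 3.5, "lt": 5}, gives a half-open interval, which is handy for ranges of dates or prices.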

Compound queries – These combine other queries, for example with Boolean logic in a bool query (must, should, must_not, and filter clauses), or wrap them to change how they are scored, as function_score does.

Ex.

```json
{
  "query": {
    "bool": {
      "must": {
        "term": { "state": "UP" }
      },
      "filter": {
        "term": { "fees": "2200" }
      },
      "minimum_should_match": 1,
      "boost": 1.0
    }
  }
}
```
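Compound queries are easy to assemble programmatically. The Python sketch below (build_bool_query and term are hypothetical helpers) builds a bool body from lists of leaf clauses and omits empty sections. It produces the same structure as the example above, except that each section holds a list of clauses, a form Elasticsearch also accepts.

```python
import json


def term(field: str, value) -> dict:
    """A leaf clause: exact-value term query."""
    return {"term": {field: value}}


def build_bool_query(must=(), filters=(), should=(), must_not=(),
                     minimum_should_match=None) -> dict:
    """Combine leaf clauses into a bool query, skipping empty sections.

    must / should contribute to scoring; filter / must_not only include
    or exclude documents.
    """
    bool_body = {}
    for key, clauses in (("must", must), ("filter", filters),
                         ("should", should), ("must_not", must_not)):
        if clauses:
            bool_body[key] = list(clauses)
    if minimum_should_match is not None:
        bool_body["minimum_should_match"] = minimum_should_match
    return {"query": {"bool": bool_body}}


body = build_bool_query(must=[term("state", "UP")], filters=[term("fees", "2200")])
print(json.dumps(body, indent=2))
```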

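Geo queries – These queries deal with geo shapes and geo points and help you find locations, or objects near a location. To use them, the field must first be mapped with a geo type, as in this mapping for a geo_shape field: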

Ex.

{ "mappings": { "properties": { "location": { "type": "geo_shape" } } }}
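A typical "near a location" search is a geo_distance query on a field mapped as geo_point (rather than the geo_shape field above). The Python sketch below shows, approximately, what such a filter computes: the great-circle distance via the haversine formula, using a mean Earth radius of about 6,371 km. The location field name, the coordinates, and the helper names are made up for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (approximation)


def haversine_km(lat1, lon1, lat2, lon2) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def within_distance(points: dict, center, max_km: float) -> list:
    """Keep the names of points whose distance from center is at most max_km."""
    lat0, lon0 = center
    return [name for name, (lat, lon) in points.items()
            if haversine_km(lat0, lon0, lat, lon) <= max_km]


# Hypothetical geo_distance body, assuming a "location" field mapped as geo_point:
geo_distance_query = {
    "query": {
        "bool": {
            "filter": {
                "geo_distance": {
                    "distance": "10km",
                    "location": {"lat": 18.52, "lon": 73.86},
                }
            }
        }
    }
}
```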

Now, let's see how we can integrate Elasticsearch with the Yii2 framework.

We can integrate Elasticsearch with any framework or application that can make HTTP requests. Yii2 is easy to understand and lets us start development quickly. It follows the MVC pattern and has rich support for extensions.

Now, let's integrate it using an extension, keeping the example simple so that it is easy to follow. The extension used here is yiisoft/yii2-elasticsearch, which is good for basic search support and provides an ActiveRecord implementation for querying indices. This walkthrough uses PHP 7 with Elasticsearch 7.12 on Windows 10. Follow the steps below to integrate it into a project:

Download Elasticsearch and extract it to your desired location. From that location, start the service with the bin\elasticsearch.bat command.

Install the extension using Composer:

```shell
composer require --prefer-dist yiisoft/yii2-elasticsearch:"~2.1.0"
```

a. To use this extension, create the file config/elk.php and add the following code to it:

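```php
<?php
// config/elk.php: connection settings for the yii2-elasticsearch extension
return [
    'class' => 'yii\elasticsearch\Connection',
    'nodes' => [
        ['http_address' => '127.0.0.1:9200'],
    ],
    // Generate query DSL for Elasticsearch 7.x
    'dslVersion' => 7,
];
```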

b. Include this configuration in config/web.php:

```php
$elk = require __DIR__ . '/elk.php';

// inside the application config array:
'components' => [
    'elasticsearch' => $elk,
    …
],
```

c. Enable the Elasticsearch debug panel by adding the following code to config/web.php. Enable it only in your development configuration:

```php
$config['modules']['debug'] = [
    'class' => 'yii\debug\Module',
    'panels' => [
        'elasticsearch' => [
            'class' => 'yii\\elasticsearch\\DebugPanel',
        ],
    ],
    // uncomment the following to add your IP if you are not connecting from localhost.
    //'allowedIPs' => ['127.0.0.1', '::1'],
];
```

d. Create a model class for each index, then write queries the same way you would write ActiveRecord queries:

```php
// match_phrase query on the fName field; asArray() returns plain arrays
$result = self::find()->query(['match_phrase' => ['fName' => $name]])->asArray()->all();
```

This statement generates the following Elasticsearch query, with $name replaced by the value being searched:

```
{
  "size": 10,
  "query": {
    "match_phrase": {
      "fName": $name
    }
  }
}
```

After executing this query, asArray() makes it return the matching records as arrays rather than model objects.

Here’s an algorithm used for simple search.

The whole search process can be divided into 4 steps.

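Fuzziness broadens search results by also matching terms that are likely relevant to the input, even when they are not spelled exactly the same. In simple terms, fuzziness lets a search tolerate spelling mistakes. It is measured as an edit distance: the number of single-character changes needed to turn one term into another. So, if the index contains "mike" and we search for "mick" with "fuzziness": 2, the response will include "mike". Note that "mick" is two edits away from "mike", so with "fuzziness": "AUTO", which allows only one edit for a four-letter term, it would not match.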
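Here is a Python sketch of that edit-distance check. It computes the optimal string alignment distance (Levenshtein distance plus swaps of adjacent characters, which Elasticsearch counts as a single edit by default) and mirrors the documented AUTO rule: terms of 0 to 2 characters must match exactly, 3 to 5 characters allow one edit, and longer terms allow two. It illustrates the rule only; Lucene's actual automaton-based implementation is different, and the helper names are hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Optimal string alignment distance: insertions, deletions,
    substitutions, and swaps of adjacent characters each cost 1."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution (or match)
            if (i > 1 and j > 1 and a[i - 1] == b[j - 2]
                    and a[i - 2] == b[j - 1]):
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)  # transposition
    return d[-1][-1]


def auto_fuzziness(term: str) -> int:
    """Edits allowed by fuzziness AUTO with its default thresholds (3 and 6)."""
    if len(term) < 3:
        return 0
    if len(term) < 6:
        return 1
    return 2


def fuzzy_match(query_term: str, indexed_term: str, fuzziness=None) -> bool:
    """Does indexed_term match query_term within the allowed number of edits?"""
    allowed = auto_fuzziness(query_term) if fuzziness is None else fuzziness
    return edit_distance(query_term, indexed_term) <= allowed
```

With these helpers, edit_distance("mick", "mike") is 2, so fuzzy_match("mick", "mike") (AUTO) is False while fuzzy_match("mick", "mike", 2) is True.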

ELK (Elasticsearch, Logstash, and Kibana) is mostly used for log analysis as well as for big data analysis. Combining these tools in the ELK Stack lets us monitor, troubleshoot, and secure our systems and data.

Today, organizations cannot afford downtime or slow load times in their applications. Poor performance erodes their competitive edge and ultimately hurts the business directly.

To ensure applications are always available and secure, IT engineers depend on the different types of data generated by their applications and the infrastructure that supports them. This data can take the form of event logs, metrics, or both, and it helps engineers monitor systems and identify and resolve issues when they occur. Logs are generated all the time, so what we need are tools to analyze them. This is where centralized log management and analytics solutions such as the ELK Stack come into the picture. They give engineers, whether in DevOps, IT Operations, or SRE roles, the visibility they need to keep apps available and performant.
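Before logs can be searched they have to be indexed, a job Logstash usually does in the ELK Stack. To show the underlying idea, here is a Python sketch that turns raw log lines into a request body for Elasticsearch's _bulk API, which expects newline-delimited JSON: an action line followed by a document line, ending with a newline. The log format, the regular expression, and the app-logs index name are all hypothetical.

```python
import json
import re

# Hypothetical log format: "2022-10-19T10:15:30 ERROR Payment service timed out"
LOG_PATTERN = re.compile(r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def parse_log_line(line: str):
    """Split one log line into fields, or return None if it does not match."""
    m = LOG_PATTERN.match(line.strip())
    return m.groupdict() if m else None


def to_bulk_body(lines, index: str = "app-logs") -> str:
    """Build an NDJSON body for POST /_bulk (Content-Type: application/x-ndjson)."""
    out = []
    for line in lines:
        doc = parse_log_line(line)
        if doc is None:
            continue  # skip lines we cannot parse
        out.append(json.dumps({"index": {"_index": index}}))
        out.append(json.dumps(doc))
    # The bulk API requires the body to end with a newline.
    return "\n".join(out) + "\n" if out else ""
```

Batching documents this way is much cheaper than sending one indexing request per log line, which is why log shippers rely on the bulk API.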

1. Download Elasticsearch, Logstash, and Kibana.

2. Extract all the downloaded files.

3. To set it up on Windows follow these steps –

4. If everything is working seamlessly, Logstash will return your message with an appended timestamp and IP.

The Elastic Stack is the next evolution of the ELK Stack: the same products, now joined by Beats, only better integrated, more powerful, and easier to get started with, giving you more flexibility to do great things.

In conclusion, if you have a website that performs a lot of searches, or you need to search large volumes of logs and build analytics on them, Elasticsearch is the way to go!

However, no discussion of Elasticsearch is complete without Logstash and Kibana, so let's save those two topics for my next blog. By now, I hope I have convinced you, but if you want to dig a little deeper, reach out to us at Nitor Infotech to learn more about our Mobility services.
