Artificial intelligence | 20 Jul 2022 | 12 min

AWS Machine Learning: Part 2: SageMaker

In my previous blog, we looked broadly at what AWS is and how it provides machine learning as a service. These services give us the flexibility to scale computational resources up or down whenever required. One of the most important of them is AWS SageMaker, which lets developers and data scientists build, train, test, and deploy machine learning models into production with minimal effort and at a lower cost.

In today’s blog, allow me to acquaint you with AWS SageMaker.

It is a fully managed, cloud-based machine learning service made up of three capabilities: Build, Train, and Deploy.

Let’s go through each of them one by one in brief.

Build

In SageMaker, we don’t have to worry about installing Python or Anaconda, as everything is taken care of by AWS. We can use all our favorite algorithms in this environment. SageMaker also supports popular frameworks such as TensorFlow and PyTorch, preconfigured and optimized. We can also bring custom algorithms and train them on SageMaker.

Train

One of the biggest advantages of SageMaker is that it supports distributed training across a single instance or multiple instances. It manages all the compute resources, which can scale to petabytes of data if needed. After training, SageMaker saves the model artifacts in an S3 bucket.
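To make the training step a little more concrete, here is a minimal sketch of a training job description, shaped like the request that boto3's `create_training_job` call accepts. The job name, image URI, role ARN, and bucket paths are placeholders invented for illustration, and the volume size and time limit are arbitrary. The sketch only builds the request, it does not call AWS. The artifact path helper reflects the usual `<output path>/<job name>/output/model.tar.gz` layout, so please treat it as an assumption to verify against your own bucket.

```python
def build_training_request(job_name, image_uri, role_arn, train_s3_uri,
                           output_s3_prefix, instance_type="ml.m5.xlarge",
                           instance_count=1):
    """Return a dict shaped like the boto3 create_training_job request."""
    # With several instances, shard the input so each one sees a different subset.
    distribution = "ShardedByS3Key" if instance_count > 1 else "FullyReplicated"
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": distribution,
            }},
        }],
        # SageMaker writes the model artifacts under this S3 prefix.
        "OutputDataConfig": {"S3OutputPath": output_s3_prefix},
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,  # more than 1 means distributed training
            "VolumeSizeInGB": 30,             # arbitrary value for this sketch
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


def expected_artifact_uri(output_s3_prefix, job_name):
    """Assumed location of the saved model once the job finishes."""
    return f"{output_s3_prefix.rstrip('/')}/{job_name}/output/model.tar.gz"


# All values below are hypothetical placeholders.
request = build_training_request(
    job_name="demo-training-job",
    image_uri="<training-image-uri>",
    role_arn="<execution-role-arn>",
    train_s3_uri="s3://my-example-bucket/train/",
    output_s3_prefix="s3://my-example-bucket/output/",
    instance_count=2,
)
print(request["ResourceConfig"])
print(expected_artifact_uri("s3://my-example-bucket/output/", "demo-training-job"))
```

With real values filled in, the dictionary could be passed along as `boto3.client("sagemaker").create_training_job(**request)`.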

Deploy

To get predictions, we can use either real-time or batch inference. Real-time inference is ideal for workloads with interactive, low-latency requirements. Real-time endpoints are fully managed and support autoscaling. For non-interactive use cases, we can use batch transform, where we do not need a persistent endpoint and latency is not a concern. SageMaker manages all the resources required for batch inference.
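The difference between the two modes shows up in the shape of the request. The sketch below builds the arguments for a real-time call (boto3's `invoke_endpoint` on the `sagemaker-runtime` client) and for a batch job (`create_transform_job` on the `sagemaker` client). The endpoint, model, and bucket names are made up for illustration, CSV is assumed as the payload format, and nothing here contacts AWS.

```python
def realtime_invoke_kwargs(endpoint_name, rows):
    """Arguments for one low-latency request against a hosted endpoint."""
    # Serialize the rows as CSV, one record per line (assumed payload format).
    body = "\n".join(",".join(str(value) for value in row) for row in rows)
    return {
        "EndpointName": endpoint_name,
        "ContentType": "text/csv",
        "Body": body,
    }


def batch_transform_request(job_name, model_name, input_s3_uri, output_s3_uri,
                            instance_type="ml.m5.xlarge", instance_count=1):
    """Request for an offline job that scores every file under an S3 prefix."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": input_s3_uri,
            }},
            "ContentType": "text/csv",
            "SplitType": "Line",  # treat each line as one record
        },
        "TransformOutput": {"S3OutputPath": output_s3_uri},
        # Instances are started for the job and released when it ends.
        "TransformResources": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
        },
    }


# Hypothetical names, for illustration only.
online = realtime_invoke_kwargs("demo-endpoint", [[5.1, 3.5, 1.4, 0.2]])
offline = batch_transform_request(
    "demo-batch-job", "demo-model",
    "s3://my-example-bucket/batch-input/",
    "s3://my-example-bucket/batch-output/",
)
print(online["Body"])
print(offline["TransformResources"])
```

The real-time request carries the data itself and expects an immediate answer, while the batch request only points at S3 locations and the results land in the output prefix once the job is done.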

An instance is basically a virtual server in the cloud. An Amazon SageMaker notebook instance is an ML compute instance running the Jupyter Notebook App. SageMaker offers instances in four varieties:

The Standard family offers the lowest-cost instances, with balanced CPU and memory performance. Examples are T2, T3, and M5.

T types are good for notebooks and development systems. M types are larger instances suitable for CPU-intensive model training and hosting.

The Compute family offers latest-generation CPUs with higher performance. Examples are C4 and C5.

These are suitable for CPU-intensive model training and hosting.

The Accelerated Computing family offers powerful GPUs. Examples are P2 and P3.

These instances are priced higher than the other families. However, algorithms that are tuned for GPUs train faster on this family of instances.

Inference Acceleration is different from the three families mentioned above. These accelerators can be added to instances from the other families.

Ideally, we should choose an instance type based on the type of algorithm we are using. For example, scikit-learn machine learning algorithms are CPU-based, so we can use instances optimized for CPUs. The best approach is to choose a family first and then experiment with various instance sizes.
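The "family first, size later" advice can be written down as a small lookup. This is only a sketch of the guidance in this blog, not an official AWS rule. The function name and the `purpose` labels are invented for illustration, and the `ml.` prefix is how SageMaker names its instance types.

```python
# Family prefixes taken from the examples mentioned in this blog.
NOTEBOOK_FAMILIES = ["ml.t2", "ml.t3"]
CPU_FAMILIES = ["ml.m5", "ml.c4", "ml.c5"]
GPU_FAMILIES = ["ml.p2", "ml.p3"]


def candidate_families(purpose, gpu_tuned=False):
    """Suggest instance families to try first for a given purpose.

    purpose   -- "notebook", "training", or "hosting" (hypothetical labels)
    gpu_tuned -- True if the algorithm is tuned to run on GPUs
    """
    if purpose == "notebook":
        return NOTEBOOK_FAMILIES
    if purpose in ("training", "hosting"):
        return GPU_FAMILIES if gpu_tuned else CPU_FAMILIES
    raise ValueError(f"unknown purpose: {purpose!r}")


# scikit-learn algorithms are CPU-based, so we start with the CPU families.
print(candidate_families("training", gpu_tuned=False))
# A GPU-tuned deep learning model would start with the accelerated family.
print(candidate_families("training", gpu_tuned=True))
```

Once a family is picked, we would still try a few sizes within it and compare training time against cost.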

SageMaker pricing has multiple components. It varies according to the instance type and size we choose. There is a pricing component for the storage allocated to the instance. Along with this, there is a variable cost involved in fractional GPUs. There is also a pricing component for data transfer in and out of the instance. Finally, the pricing varies a bit across AWS regions. Here is the link to the pricing. The major components in development, training and inference are-
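To see how these components add up, here is a rough back-of-the-envelope estimator. Every rate in it is a made-up number for illustration, not an actual AWS price, and the conversion of 730 hours per month for storage is an assumption of this sketch. Real figures should come from the pricing page for the chosen region.

```python
HOURS_PER_MONTH = 730  # assumed average, used to pro-rate monthly storage rates


def estimate_cost(hours, hourly_rate, instance_count=1,
                  storage_gb=0.0, storage_rate_per_gb_month=0.0,
                  transfer_gb=0.0, transfer_rate_per_gb=0.0):
    """Return a per-component cost breakdown plus the total."""
    compute = hours * hourly_rate * instance_count
    # Storage is billed per GB-month, so scale it to the hours actually used.
    storage = storage_gb * storage_rate_per_gb_month * (hours / HOURS_PER_MONTH)
    transfer = transfer_gb * transfer_rate_per_gb
    return {
        "compute": compute,
        "storage": storage,
        "transfer": transfer,
        "total": compute + storage + transfer,
    }


# Hypothetical job: 2 instances for 10 hours, 50 GB of storage, 20 GB transferred.
costs = estimate_cost(
    hours=10, hourly_rate=0.25, instance_count=2,
    storage_gb=50, storage_rate_per_gb_month=0.10,
    transfer_gb=20, transfer_rate_per_gb=0.02,
)
for component, amount in costs.items():
    print(f"{component}: {amount:.2f}")
```

Even with invented rates, the breakdown shows that instance hours usually dominate, which is why the choice of instance type and size matters so much.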

Now that we have understood what SageMaker is and how the pricing works, let’s take a look at the algorithms and frameworks that are available to us.

SageMaker gives us four different options for training and hosting models.

One option is the built-in algorithms provided by SageMaker. They are highly optimized for the AWS Cloud and scale up easily. The algorithms provided by SageMaker are-

K-nearest Neighbours, Linear Learner, XGBoost, LightGBM, CatBoost

DeepAR

Image Classification, Semantic Segmentation

Sequence-to-Sequence, BlazingText, Object2Vec

K-means, LDA, Neural Topic Model, Principal Component Analysis, Random Cut Forest

IP Insights

Factorization Machines

You can get details about these algorithms here.

We can train our model in AWS SageMaker in multiple ways.

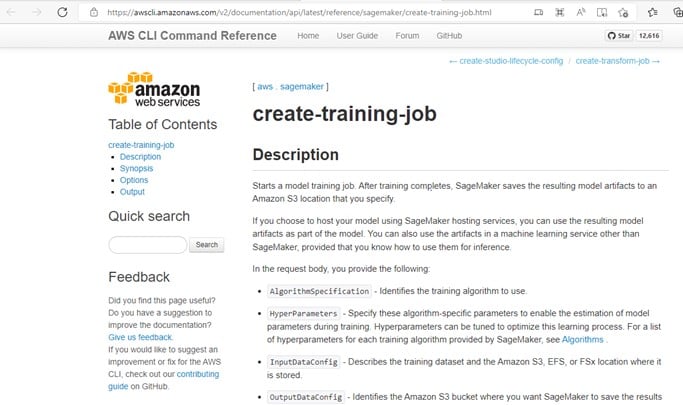

1. AWS CLI

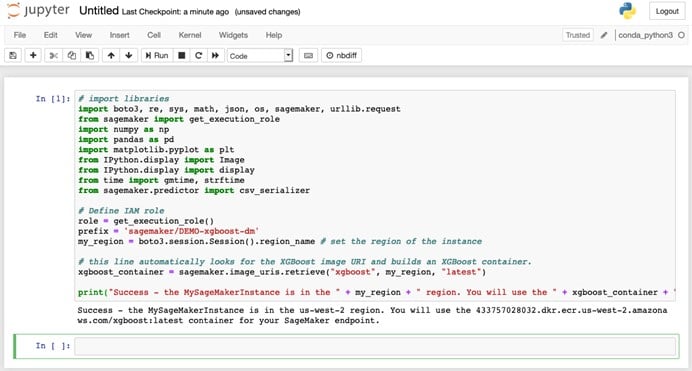

2. SageMaker Console

3. Model Coding

We can use any of these three ways to train our model: code the model from end to end, use the AWS command line tool to pass the commands, or use the SageMaker console.

Here are a few dos and don’ts when it comes to cost saving-

SageMaker can feel overwhelming because there are a lot of things involved, but if we understand our data, we will be able to figure out what we need from it. We also need to understand how the pricing works, as this can benefit us in the long run.

In the next blog, we will look at how to code programmatically in SageMaker. Till then, stay tuned and visit us at Nitor Infotech to learn more about what we do in the technology realm.
