Big Data and Analytics | 29 Jun 2022 | 10 min

Data Extraction from SAP

In our technology-focused world, SAP ERP systems are very popular. Irrespective of the size or industry, businesses can reap the benefits of SAP. As you may be aware, it is essential to automate data extraction from SAP. If the idea sounds slightly daunting, it doesn’t need to be.

Remote Function Call (RFC) is the standard SAP interface for communication between SAP systems and other systems, so RFC calls function to be executed in a remote system. There are multiple ways to be developed to extract data from SAP tables. This blog covers the following two approaches to extract data from SAP ECC:

I’m going to share the steps to use PyRFC and SAP NetWeaver SDK. Let’s get started!

SAP NW RFC SDK is the abbreviation for SAP NetWeaver Remote Function Call System Development Kit. This lets you manage the RFCs.

1. Go to the SAP Service Marketplace: http://service.sap.com/support

2. Enter your SAP Service Marketplace username and password. The SAP Support Portal page appears. Here is the screenshot for your reference.

3. Click the ‘Download Software’ icon.

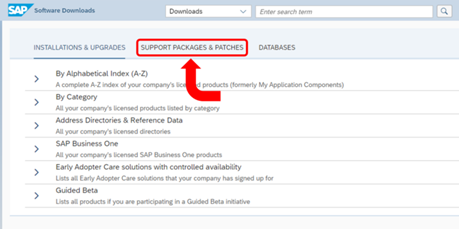

4. Click the ‘Support Packages & Patches’ tab.

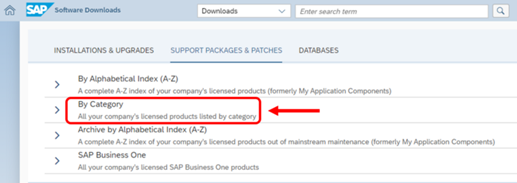

5. Click ‘By Category’ in the list.

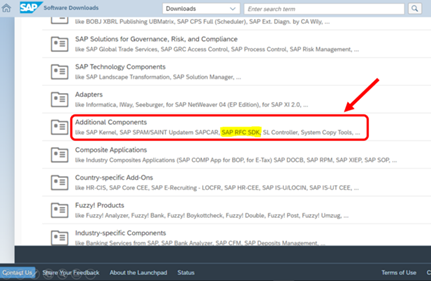

6. Click ‘Additional Components’ in the list.

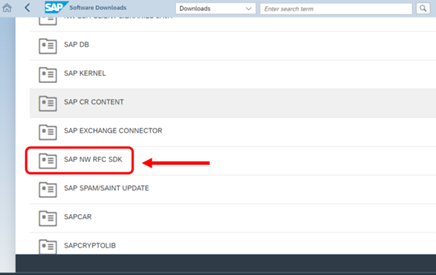

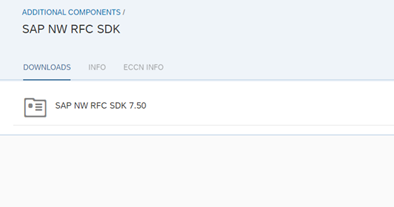

7. Click ‘SAP NW RFC SDK’ in the list.

8. Click on the most recent version of SAP NW RFC SDK.

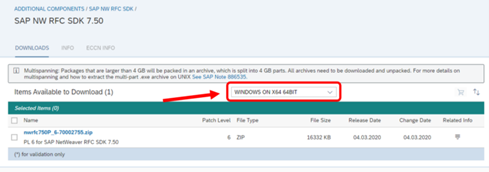

9. Select the operating system for which you want to download.

10. Click the hyperlinked .zip file to download libraries.

11. Extract the files and folders that are inside the .zip to your SAP folder.

12. Install PyRFC Python package with the following commands –

pip install pyrfc pip install pynwrfc

As we have done with pre-requisites, we can start extracting data from SAP tables using Python code.

Extract data from SAP tables and upload the data on Azure Blob Storage as CSV files using the following code snippet.

Import requires modules as mentioned below –

from azure.storage.blob import BlobServiceClient, BlobClient from azure.storage.blob import ContentSettings, ContainerClient from cryptography.fernet import Fernet import pandas as pd import json from pyrfc import Connection from datetime import datetime import pyodbc

Read the SAP connection details from the configuration file kept in Blob Storage.

#Read connection details for configuration table storage_end_point = "https://xxxxx.blob.core.windows.net" storage_account_key = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx" storage_container_name = "configurations" blob_name = "config_connection.json" key = "key.txt"

Establish the connectivity with Azure Blob Storage to read the configuration file.

blob_service_client_instance = BlobServiceClient(account_url= storage_end_point, credential =storage_account_key)

container = blob_service_client_instance.get_container_client(storage_container_name)

blobs = container.list_blobs(name_starts_with=blob_name)

blob = [i for i in blobs][0]

key_blobs = container.list_blobs(name_starts_with=key)

key = [i for i in key_blobs][0]

result = container.get_blob_client(blob).download_blob().readall()

result_json = json.loads(result.decode('utf8').replace("'",'"'))

Get SAP Server connection details from the configuration file.

print("-----Getting SAP Server details from configuration file------")

ashost = result_json['asHost']

sysnr = result_json['sysnr']

client = result_json['client']

user = result_json['user']

Decrypt the password to connect SAP.

print("------Decrypting SAP PWD------")

pwd = str.encode(result_json['sapPWD'])

key_result = container.get_blob_client(key).download_blob().readall()

fernet = Fernet(key_result)

decpwd = fernet.decrypt(pwd).decode()

decdbpwd = fernet.decrypt(dbpwd).decode()

Prepare connection string to connect the SAP server.

conn = Connection(ashost=ashost, sysnr=sysnr, client=client, user=user, passwd=decpwd)

print("------Connected SAP Successfully------")

Query SAP table using Remote function call RFC_READ_TABLE.

data = conn.call('RFC_READ_TABLE', QUERY_TABLE='KONV', DELIMITER='|', OPTIONS=options, FIELDS=['KNUMV', 'KPOSN', 'KSCHL', 'KWERT'], ROWSKIPS = rowskips, ROWCOUNT = ROWS_AT_A_TIME)

Write the data to Blob Storage in the form of a CSV file.

data_fields = data['DATA'] if not has_header: data_names = data['FIELDS'] headers = [x['FIELDNAME'] for x in data_names] for i in range (0, len(headers)): column_name=headers[i] if i == 0: header_str = column_name else: header_str = header_str+"|"+column_name csv_str = header_str has_header = True for row in data_fields: csv_str=csv_str+'\n'+row['WA'] blob_client = blob_service_client_instance.get_blob_client(container='sapdata', blob='KONV.csv') blob_client.upload_blob(csv_str, overwrite=True)

1. Download SAP connector for .Net from SAP website and install it on the virtual machine which can access the SAP server. This is required to establish the connectivity between SAP and Azure Data Factory through integration runtime.

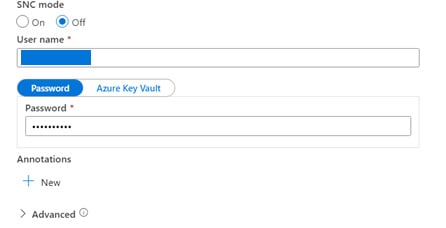

2. Create a Linked Service in Azure Data Factory with all the required connection details as shown below.

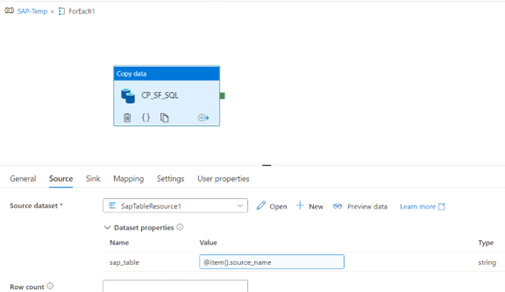

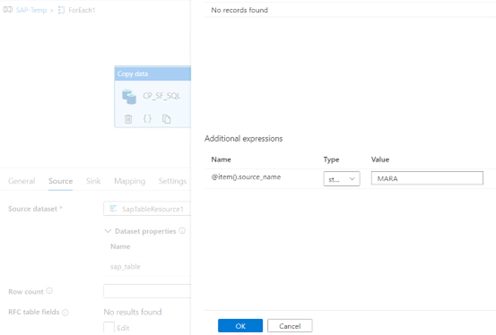

3. Copy activity to copy the data from the SAP table in Azure Data Factory.

Preview the result of any of the SAP tables before copying to any of the destinations.

Shown below is the screenshot for the sample data from the SAP table.

There you have it! These are the ways to extract data from SAP using NetWeaver SDK + Python and the SAP connector for .Net + Azure Data factory.

Send us an email with your thoughts about this blog and visit us at Nitor Infotech to understand how we partner with businesses to enhance their journeys of digital transformation. Also click here to discover what we offer in the realm of big data technology.

we'll keep you in the loop with everything that's trending in the tech world.