In the rapidly evolving field of Generative AI (GenAI), innovation is at the core of technological growth, and you must have heard umpteen times that your organization must adapt to changing demands and changing landscapes.

What’s more, optimizing your generative AI model for peak performance is crucial.

In this blog, we are going to dive into how to fine-tune your generative AI model in five essential steps.

Well, without further ado, let’s get started!

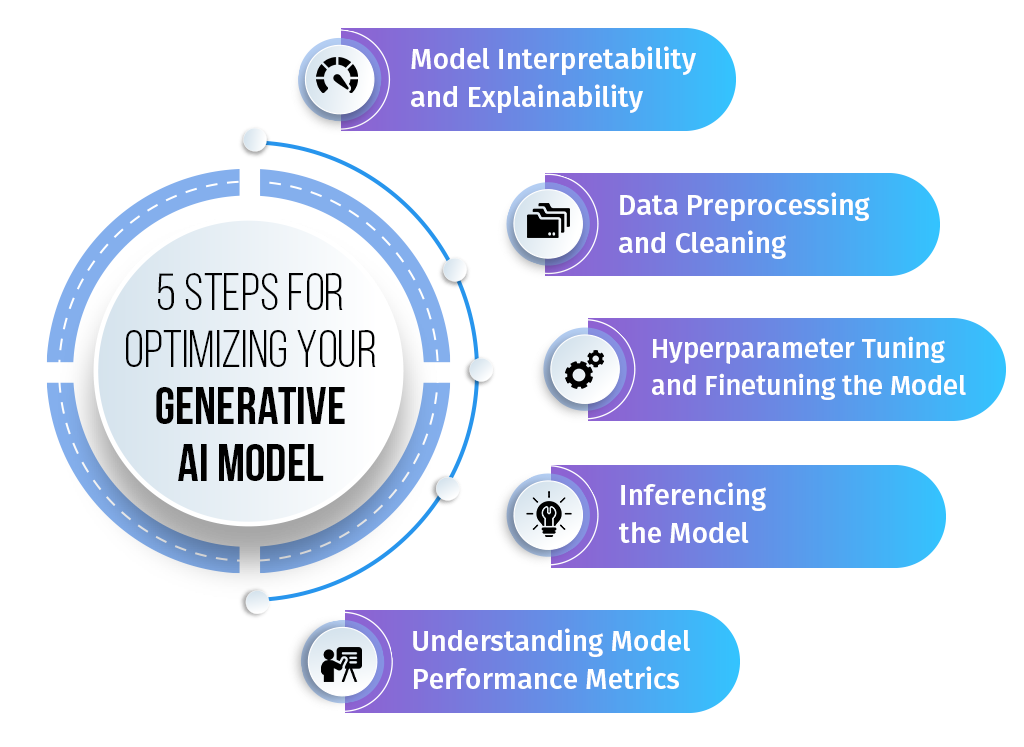

Fig: 5 Steps for Optimizing Your Generative AI Model

The first step is…

1. Model Interpretability and Explainability

- Understanding Model Decisions: Interpretability is about opening the black box of AI. It’s crucial for trust and debugging.

- Trade-offs: Complex models can be powerful but inscrutable. Strive for a balance where your model is both potent and understandable.

- Tools for Clarity: Employ tools like SHAP and LIME for insights into individual predictions and use feature importance plots to see what drives your model.

Here is a link you can head to, if you’d like to explore the Stanford NLP Python Library for understanding and improving PyTorch models via interventions.

Time to move to the second step…

2. Data Preprocessing and Cleaning

- Significance of High-Quality Training Data: Garbage in, garbage out. This fundamental principle in data science emphasizes that the quality of input data directly determines the quality of the output. The quality of your training data sets the ceiling for your model’s performance. If the training data is flawed or of low quality, the model’s ability to generate accurate or coherent outputs is severely limited. So, investing time in ensuring high-quality training data is essential for setting a high-performance ceiling for your model.

- Techniques for Handling Data Issues:

-

- Address missing values with imputation techniques.

- Tame outliers with robust scaling.

- Silence noisy data with smoothing methods.

- Role in Model Performance: Clean data acts as a foundation for the model to learn effectively. It’s akin to a well-tuned instrument in an orchestra. Each note (data point) must be clear and in harmony with the rest for the symphony (model output) to reach its full potential. By ensuring data is pre-processed and cleaned, you’re setting the stage for your generative AI model to perform at its best. This allows it to produce high-quality, innovative content that reflects the patterns and structures present in the training data.

How to generate instruction sets:

Let’s look at two examples.

- Alpaca: Alpaca is a strong, replicable instruction-following model fine-tuned from Meta’s LLaMA 7B model. It behaves similarly to OpenAI’s text-davinci-003 but is surprisingly small and easy to reproduce. It’s designed for academic research and aims to address deficiencies in existing instruction-following models.

- Dolly: Dolly, developed by Databricks, is a large language model. To use it, you can create a pipeline with the transformers library. Dolly is versatile and can answer instructions effectively.

Well, let’s look at the third step.

3. Hyperparameter Tuning and Finetuning the Model

- Understanding Hyperparameters: Hyperparameters are the dials and switches of your generative AI model. They shape the learning process and can dramatically affect performance. Certain instances of hyperparameters are:

-

- The number of hidden layers in a neural network

- The number of leaves of a decision tree

- Learning rate of a gradient descent

- The ratio of the training set and test set

- Tips for Effective Tuning: Start with a broad search, narrow down to promising regions, and then fine-tune with precision. Remember, patience is a virtue in hyperparameter tuning.

- Tools for the Trade: Leverage the right tools and consider advanced methods like Bayesian optimization for a more intelligent search. In case you are hearing of it for the first time, Bayesian optimization is a sequential design strategy for global optimization of black-box functions.

Here are a couple of examples of tools you can use:

- LoRA is a low-rank decomposition method designed to reduce the number of trainable parameters in large language models.

- By using LoRA, we can speed up fine-tuning processes and reduce memory usage.

- QLoRA extends LoRA’s capabilities. While default LoRA settings in PEFT add trainable weights to query and value layers within each attention block, QLoRA adds trainable weights to all linear layers of a transformer model.

- Remarkably, QLoRA can achieve performance comparable to a fully fine-tuned model while significantly reducing the number of parameters.

Time to explore what the fourth step involves!

4. Inferencing the Model

Inference is the process of using a trained ML model to make predictions or generate outputs based on new, unseen data.

In the context of generative AI models, inference involves taking an input (such as a prompt or query) and producing a relevant output (such as text, images, or other forms of content) using a pre-trained model.

Efficient inference is crucial for real-world applications, especially when you are deploying models in production systems.

Now, let’s understand a few quantization techniques.

Quantization Techniques

1. GPTQ (Post-Training Quantization)

-

- GPTQ stands for GPT Quantization.

- It’s a post-training quantization method specifically designed for language models like GPT.

- The goal is to make the model smaller while maintaining performance.

-

- Reduced model size.

- Still capable of serving quantized models created using AutoGPTQ or Optimum.

2. Bitsandbytes Quantization

-

- It is a library for applying 8-bit and 4-bit quantization to models.

- Unlike GPTQ, it doesn’t require a calibration dataset or post-processing.

- Benefits of Bitsandbytes:

-

- It enables large-scale models to fit in smaller hardware without significant performance degradation.

- There is no need for calibration data.

- 4-bit Quantization with Bitsandbytes:

-

- Choose from 4-bit float (fp4) or 4-bit NormalFloat (nf4).

Quantization techniques play a pivotal role in optimizing generative models for efficient inference.

Whether you’re serving GPTQ-quantized models or exploring the world of bitsandbytes, remember that smaller models can still pack a powerful punch!

Now, let’s understand the fifth and final step.

5. Understanding Model Performance Metrics

- Defining Success Metrics: Before diving into optimization, it’s vital to define what success looks like for your generative AI model. These metrics will guide your optimization efforts and help you measure progress.

- Common Performance Metrics: Metrics like accuracy, precision, recall, and F1-scores are the compass that guide your GenAI ship.

-

- Accuracy measures the percentage of correct predictions; precision focuses on the relevancy of positive predictions.

- Recall assesses the model’s ability to find all relevant instances.

- Finally, the F1-score balances precision and recall.

- Impact on Product Performance: These metrics are not just numbers; they reflect the user experience. High accuracy ensures reliability, precision maintains relevance, and recall guarantees comprehensiveness.

- Perplexity (PPL): It is a common metric used for evaluating language models, particularly classical ones (also known as autoregressive or causal language models). It quantifies how well a model predicts a sequence of tokens. Essentially, perplexity evaluates the model’s ability to predict uniformly among the specified tokens in a corpus. It’s important to consider tokenization when comparing different models.

- Cross-entropy loss: It is closely related to perplexity. It’s the negative log-likelihood of the true data distribution given the model’s predictions. Exponentiating the cross-entropy yields perplexity.

Well, we are nearing the summing up of this blog…

Optimizing a GenAI model is a journey of balancing precision and generalization, understanding and trust. Follow these steps, and you’ll be well on your way to peak product performance!

As generative AI continues to evolve on its exciting journey, so too must our approaches to product performance, always aiming for the highest standards of quality and reliability.

Send us an email with your views on this blog. Also, visit us at Nitor Infotech to discover details about our prowess in GenAI, machine learning, and artificial intelligence technology. Happy optimizing!

Discover details about our team’s multifaceted capacity in working wonders with Generative AI.

Read Factsheet![]()